The availability of deep learning frameworks like PyTorch or JAX has revolutionized array processing, regardless of whether one is working on machine learning tasks or other numerical algorithms. The Haskell library ecosystem has been catching up as well, and there are now multiple good array libraries. However, writing high-performance array processing code in Haskell is still a non-trivial endeavor.

If you are struggling with getting your computation to run on a GPU, or have a piece of code that isn’t easily expressed with massiv’s or Accelerate’s builtins, or fail at making LLVM autovectorize your loop, the halide-haskell library might be for you.

Why is there no NumPy analogue in Haskell?

Let’s start by discussing why, in 2023, there’s still no Haskell library that is competitive with NumPy. The short answer is that Haskell is mainly driven by the open source community, and building such a library takes time… a lot of time.

For the longer answer, suppose you have an array data type, say Vector from

the vector library, and now wish

to implement some operations with it. Your vector is actually a representation

of pixels in an image (that we’ve first flattened) and the first function that

you’d like to implement is brightening. Trivial:

brighten :: Float -> Vector (Word8, Word8, Word8) -> Vector (Word8, Word8, Word8)

brighten factor image = map (\(r, g, b) -> (scale r, scale g, scale b)) image

where

scale = round . (min 255.0) . (factor *) . fromIntegralFor each color component in a pixel, you convert it to a Float, multiply it

by some factor, and then cast it back to Word8 making sure it doesn’t

overflow.

The vector library has great fusion support, so the above code doesn’t allocate

intermediate vectors and is quite fast, but what if you need it to be even

faster? After fighting with GHC for a while, you give up and rewrite the

brighten function in C1.

void brighten(float const factor, ptrdiff_t const length,

uint8_t const* src, uint8_t* dest) {

for (ptrdiff_t x = 0; x < length; ++x) {

for (ptrdiff_t c = 0; c < 3; ++c) {

dest[3 * x + c] =

(uint8_t)roundf(fminf(255.0f, factor * (float)src[3 * x + c]));

}

}

}Then, you rewrite the function again, but now using SSE intrinsics, then AVX, AVX2, AVX512. Then your Mac users complain and you add a NEON implementation. You see where we’re going with this: by the time you’re done, you have a multitude of functions that you need to test, profile, and maintain. And now consider that you’d have to do the same for hundreds if not thousands of functions2…

The solution that’s becoming more and more popular in the recent years is just-in-time (JIT) compilation3. It lets us:

- optimize code for the hardware that our program is running on (i.e. no more need to implement separate AVX, AVX2, AVX512, etc. versions of the same function);

- reduce the number of functions that we need to support, because we give domain experts the tools to implement their custom operations (instead of trying to foresee all use cases and support all domains).

Enter Halide

In the Haskell library ecosystem, there is a well-established project that relies on JIT compilation to generate high-performance kernels — Accelerate. It’s a great library, but there are a few cases where you might want an alternative:

- you already have your own array data type, and the performance and ergonomics of back and forth conversion between that array type and Accelerate’s are unacceptable to you;

- you have an operation that Accelerate cannot optimize well enough4;

If any of the above applies to you, the Halide domain specific language might be for you. It supports an even wider range of platforms than Accelerate, and being a lower-level language than Accelerate, it gives you the tools to squeeze the very best performance out of your kernels. There’s just one problem: it’s a C++ library, and it’s mainly meant to be used from C++.

halide-haskell solves that

problem for you. It’s a Haskell library that lets you express your

computational kernels in pure Haskell, then calls down to Halide to JIT compile

and execute the kernels. For example, here’s how we can implement the

brighten function in halide-haskell:

brighten :: Expr Float -> Parameter 2 Word8 -> IO (Function 2 Word8)

brighten factor input = do

[x, c] <- mapM mkVar ["x", "c"]

let value = cast . min 255 . (factor *) . cast $ input ! (c, x)

define "brighten" (c, x) valueIf you want to follow along and try to compile the code, you will need Nix, and there are some installation instructions in the README.md. Nix is needed to simplify the installation and patching of system dependencies (not all our patches have been merged upstream yet).

Basic building blocks

Let’s slowly go through the code and explain the concepts that are essential to

understand and write halide-haskell pipelines. Expr Float is a scalar

expression of type Float. You can use Expr a similarly to normal numeric

types — they have instances for Num, Floating, etc. When we execute

Haskell code with Exprs, it doesn’t compute anything yet. Instead, it builds

an AST (Abstract Syntax

Tree) that Halide can then

use to generate efficient machine code.

The next level of abstraction on top of scalar expressions are array

expressions. These are represented by the GADT Func:

data Func (t :: FuncTy) (n :: Nat) (a :: Type)

data FuncTy = FuncTy | ParamTy

type Function n a = Func 'FuncTy n (Expr a)

type Parameter n a = Func 'ParamTy n (Expr a)So Func t n a is an n-dimensional array expression with the scalar type

a. The type variable t specifies whether we’re dealing with a Parameter or

a Function5. Parameters are arrays that our pipeline receives as input, and

Functions are arrays that our pipeline produces (these can be both

intermediate results and output buffers). We use the operator ! to access an

array at a given index, like in input ! (c, x). This is actually one of

the benefits of halide-haskell compared to the C++ interface of Halide: in C++,

the code input[c] would’ve compiled cleanly and failed at runtime, whereas in

Haskell we check at compile time that the number of tuple elements in (c, x)

matches the number of dimensions of input.

We have our scalar, arrays, and we know how to index into arrays, the only

remaining component are loops. So how do you write loops in halide-haskell? You

don’t! They are implicit. So when we write define "brighten" (c, x) value,

Halide automatically transforms it into something like:

for x in ...

for c in ...

brighten[c, x] = valueThat means that we don’t need to worry about loop bounds — they are inferred automatically by Halide; but since we’ve given our loop variables names, we have a way to refer to specific loop levels and tell Halide to perform transformations on the loops such as reordering, parallelization, vectorization etc.

These concepts should be sufficient to understand the definition of the

brighten function above. For more information about specific functions, have

a look at the documentation.

Running the pipelines

Now that we have a function that builds the AST, we can use the compile

function to turn it into an runnable Haskell function:

ghci> :t compile brighten

compile brighten

:: IO

(Float

-> Ptr (HalideBuffer 3 Word8)

-> Ptr (HalideBuffer 3 Word8)

-> IO ())and then invoke like this:

kernel <- compile brighten

withHalideBuffer inputArray $ \input ->

withHalideBuffer outputArray $ \output ->

kernel 1.5 input outputwhere we’re using the withHalideBuffer function to treat any array type that

is an instance of IsHalideBuffer as a Ptr (HalideBuffer n a):

ghci> :t withHalideBuffer

withHalideBuffer

:: IsHalideBuffer t n a =>

t -> (Ptr (HalideBuffer n a) -> IO b) -> IO bThe HalideBuffer type is very generic such that it’s possible to interoperate

with different array data types. For instance, let’s take the Image type from

the JuicyPixels library.

Here’s how one could implement an instance of IsHalideBuffer for it:

instance (Pixel a, r ~ PixelBaseComponent a, IsHalideType r)

=> IsHalideBuffer (Image a) 3 r where

withHalideBufferImpl :: Image a -> (Ptr (HalideBuffer 3 r) -> IO b) -> IO b

withHalideBufferImpl im action =

unsafeWith im.imageData $ \cpuPtr ->

bufferFromPtrShape cpuPtr shape action

where

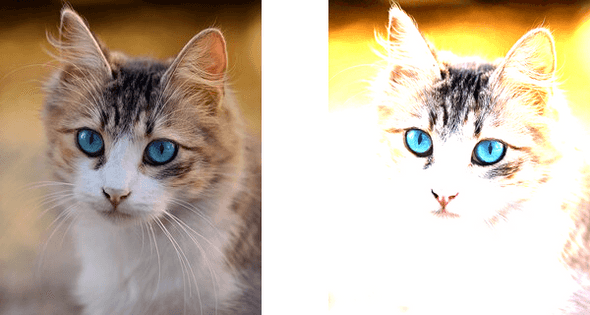

shape = [componentCount (undefined :: a), im.imageWidth, im.imageHeight]If we do the same for the MutableImage type, we can actually test our

pipeline on a real image:

-- Compile the kernel

kernel <- compile brighten

-- Load an image from file

readImage "test/cat.jpg" >>= \case

Right image -> do

let rgb@(Image width height _) = convertRGB8 image

output <- newMutableImage width height

-- We tell halide-haskell to treat images as buffers

withHalideBuffer @3 @Word8 rgb $ \input ->

withHalideBuffer @3 @Word8 output $ \output' ->

-- Execute the kernel

kernel 2.5 input output'

-- Save the result

savePngImage "test/brighter_cat.png" . ImageRGB8

=<< unsafeFreezeImage output

Left e -> error eThe IsHalideBuffer instances for Image and MutableImage are actually

available in the halide-JuicyPixels

library,

so there’s no need to write them by hand. If images aren’t your cup of tea and

you prefer numerics, we also have instances for the Array type from

arrayfire in the

halide-arrayfire

package.

More instances are coming and your contributions are welcome!

The road there

Now that we’ve discussed some high-level concepts of halide-haskell, let’s talk about the elephant in the room — the FFI (Foreign Function Interface). As we’ve mentioned before, halide-haskell is a wrapper around the Halide C++ library, but why? Isn’t it cleaner (and safer) to have a pure Haskell implementation?

Well, Halide’s code has around 150,000 lines of C++ code in the src/ directory

and another 50,000 lines in tests. It would’ve taken us years to reimplement it

all in Haskell, so we’ve decided to take a shortcut and interface with Halide

via the FFI instead.

There are two main difficulties to overcome:

- C++ name mangling.

- Conceptual differences between Haskell and C++ such as function and operator overloading.

We won’t give you a recipe for solving these difficulties, but will discuss some of the tricks that we use in halide-haskell, and perhaps they can serve as an inspiration for your next project.

Safely calling out to C++

For a short project it’s very unlikely that you’d want to implement C++‘s name mangling manually, so there are three approaches that we’re familiar with that let you invoke a C++ function from Haskell:

-

Create a helper

.cppfile and define wrapper functions with C linkage for all C++ functions that you intend to use. An example can be seen in the souffle-haskell library and the corresponding blog post. -

Rely on TemplateHaskell to do the name mangling and directly import a C++ function with

foreign import. The mangle library lets you accomplish that. -

Rely on TemplateHaskell to generate both the wrapper functions and the

foreign importstatements. This can be done with the inline-c-cpp library at the expense of a slightly awkward syntax.

For halide-haskell, we’ve decide to go with the third option for one simple

reason: safety. It’s been advertised

before that one

should use the CApiFFI extension when writing the foreign import

statements. However, CApiFFI does not catch all mistakes, especially when it

comes to constness and pointer casting. In halide-haskell, we’re relying on

the FFI a lot — there are around 200 foreign import declarations — so we

let the inline-c-cpp library generate everything and use the host C++ compiler

to do the checking.

When writing the FFI wrappers, I’ve found it useful to define a Template Haskell function such as:

importHalide :: DecsQ

importHalide =

concat

<$> sequence

[ C.context =<< halideCxt

, C.include "<Halide.h>"

, defineExceptionHandler

]that sets up everything related to inline-c-cpp. This function lives in the

Language.Halide.Context module and can also contain other Template Haskell

magic. The other modules then simply put importHalide somewhere close to the

top of the file, and can afterwards freely refer to Halide’s C++ types in the

inline-c blocks.

inline-c-cpp lets you define a mapping between C/C++ types and Haskell types, e.g.:

cppTypePairs

[ ("Halide::Expr", [t|CxxExpr|])

, ("Halide::Var", [t|CxxVar|])

, ("Halide::RVar", [t|CxxRVar|])

]However, that means that the CxxExpr type needs to be defined before we

define the importHalide function. But what if you want to use inline-c blocks

to define instances for CxxExpr? You now necessarily have to put them in

different modules which leads to orphan instances. The solution that we came up

with is to define two helper functions:

optional :: (CIdentifier, String) -> Q [(CIdentifier, TypeQ)]

optional (cName, hsName) = do

hsType <- lookupTypeName hsName

pure $ maybe [] (\x -> [(cName, pure (ConT x))]) hsType

optionals :: [(CIdentifier, String)] -> Q [(CIdentifier, TypeQ)]

optionals pairs = concat <$> mapM optional pairsOur type mappings are then defined as:

cppTypePairs

<$> optionals

[ ("Halide::Expr", "CxxExpr")

, ("Halide::Var", "CxxVar")

, ("Halide::RVar", "CxxRVar")

]The cool part is that now, CxxExpr doesn’t have to be defined in the

Language.Halide.Context module, i.e. the following is valid:

module Language.Halide.Expr where

import Language.Halide.Context

data CxxExpr

importHalide

-- rely on the CxxExpr <-> Halide::Expr mapping hereThere are a few other tricks that are used to simplify writing the FFI code, but we won’t bore you with details — feel free to browse the halide-haskell codebase for more information.

After the helper functions had been defined, the process of writing the bindings actually went very smoothly. Very rarely did we encounter issues related to the interop with C++, and when we did, they often turned out to be bugs in the dependencies rather than our code.

Type-level magic

Since Halide first builds an AST and only then compiles and runs it, it can do quite a bit of error and bounds checking during compilation. However, since Halide’s “compile time” is still the “run time” for our Haskell code, all Halide errors result in exceptions. In Haskell it’s more common to try to rely on the type system to catch as many errors as possible during compilation, and in halide-haskell we follow that approach and attempt to build a “safer” interface for Halide than the original C++ API. Let’s consider an example.

Since array indexing happens very often when using halide-haskell, we wanted to

provide as clean a syntax for it as possible in Haskell. You write arr ! x in

the one dimensional case and arr ! (x, y, z) in the many-dimensional case. So

how do we teach Haskell that arr ! x is valid only when arr is

one-dimensional? In halide-haskell, we define the following type family:

type family UniformTuple (n :: Nat) (t :: Type) = (tuple :: Type) | tuple -> n where

UniformTuple 0 t = ()

UniformTuple 1 t = Expr t

UniformTuple 2 t = (Expr t, Expr t)

UniformTuple 3 t = (Expr t, Expr t, Expr t)

...and use functional dependencies to specify that the number of dimensions n is

deducible from the tuple type. The problem is that working with tuples in

Haskell is a bit messy since you can’t build them up recursively or iterate

over them. To support such operations, we define a heterogeneous list data

type:

data Arguments (k :: [Type]) where

Nil :: Arguments '[]

(:::) :: t -> (Arguments ts) -> Arguments (t ': ts)and then define a type class that lets us convert back and forth between

between tuples and Arguments (i.e. essentially an isomorphism):

class (ToTuple a ~ t, FromTuple t ~ a) => IsTuple a t | a -> t, t -> a where

toTuple :: Arguments a -> t

fromTuple :: t -> Arguments aThen, by using the Dict type from the constraints

package, we can prove to GHC

that e.g. every UniformTuple does in fact have an instance of IsTuple, or

that all types in the UniformTuple are the same. The implementation isn’t

pretty, but in the end we are able to define the indexing operator as:

(!) :: (HasIndexType n, IsFuncDefinition a) => Func t n a -> IndexType n -> a

(!) func args = ...And now all these variants of usage are valid:

let e1 = arr1 ! x

e2 = arr2 ! (x, y, z)

(e3, e4) = arr3 ! (y, z)For other type-level tricks we again refer the reader to the halide-haskell

codebase, especially the implementation of the

compile

function may be of interest.

Reimplementing a Numba kernel with halide-haskell

Since I’m pursuing a Ph.D. in computational physics and do a lot of numerical computing, I’ve asked my fellow scientists for examples where a library like NumPy wasn’t enough (most of them are using Python), and they had to resort to manually writing kernels in e.g. Numba. The most common response was that they’re actually quite happy with NumPy and never had to go beyond it, but we did receive a few interesting examples. Let’s take one and reimplement it in halide-haskell (for a more complicated example, see this repository).

Our goal is to implement equations (10)-(12) from this paper. Here they are (note, that you don’t need to understand their origin or the maths behind them to follow along):

Essentially, we are computing some matrix bk,n from a vector ak in the following way:

- Compute the first column (n=0) using an explicit equation;

- Compute the second column (n=1) as a function of the first;

- Every other column n+1 is a function of two previous ones n−1 and n.

One other thing that we should keep in mind is that the recursion is unstable for the upper triangular part of b. To compute it, we can use the following transpose relation:

Without further ado, here is the implementation for the first column:

# Python (Numba)

N = a.shape[0]

b[0, 0] = s * a[0] - a[1] / 3.

for k in range(1, N - 1):

b[k, 0] = a[k - 1] / (2.*k - 1.) \

- a[k + 1] / (2.*k + 3.)

b[N - 1, 0] = a[N - 2] / (2.*(N - 1) - 1.)-- Haskell (halide-haskell)

k <- mkVar "k"

n <- mkVar "n"

mkColumn0 <- compile $ \s a' -> do

a <- constantExterior 0 a'

define "column0" (k, n) $

ifThenElse (k `eq` 0) (s * a ! k) 0

+ a ! (k - 1) / (2 * cast k - 1)

- a ! (k + 1) / (2 * cast k + 3)The code is very similar, except that in Numba we have to manually split the

loop to handle the boundaries properly. halide-haskell provides the

constantExterior function that extends the function domain using the

specified constant. At compile time, Halide then automatically performs the

loop splitting. I.e. once you get used to the builtin functions, the

halide-haskell code is actually simpler without sacrificing performance.

The ifThenElse function is halide-haskell’s analogue of the standard

if _ then _ else _ construct, but lifted to work with Expr types:

ifThenElse :: IsHalideType a => Expr Bool -> Expr a -> Expr a -> Expr aThe second column is implemented pretty similarly, so let’s jump directly to the recursive case. In Python, it looks like this:

for n in range(1, N - 1):

for k in range(n + 1, N - 1):

b[k, n + 1] = -(2.*n + 1.) / (2.*k + 3.) * b[k + 1, n] \

+ (2.*n + 1) / (2.*k - 1.) * b[k - 1, n] + b[k, n - 1]

k = N - 1

b[N - 1, n + 1] = (2.*n + 1) / (2.*k - 1.) * b[k - 1, n] + b[k, n - 1]

for k in range(N - 1):

for n in range(N):

b[k, n] = (-1)**(n + k) * (2.*k + 1.) / (2.*n + 1.) * b[n, k]Note how the author decided to implement the transposition separately, probably to keep the code cleaner by avoiding branches inside the loops. In halide-haskell it’s actually the other way around: we prefer to keep the code functional and minimize updates without worrying too much about branches. We hope that Halide does a good job optimizing them. And this is indeed the case, as we shall see later.

Halide does not support recursive functions, so we will have to do the recursion in pure Haskell. Let’s implement a function that computes the next column using the previous two:

nextColumn :: Expr Int32 -> Parameter 2 Double -> IO (Function 2 Double)

nextColumn n b' =

k <- mkVar "k"

_m <- mkVar "m"

b <- constantExterior 0 b'

let upper =

cast (2 * (n + k) `mod` 2 - 1)

* (2 * cast k + 1) / (2 * cast n + 3)

* b ! (n + 1, k)

let lower =

-(2 * cast n + 1) / (2 * cast k + 3) * b ! (k + 1, n)

+ (2 * cast n + 1) / (2 * cast k - 1) * b ! (k - 1, n)

+ b ! (k, n - 1)

define "next" (k, _m) $ ifThenElse (k `lt` n) upper lowerWe receive the current index n and the previous state of the buffer b' and

compute the n + 1st column. We can now implement the full function for computing b:

spectralConvolutionMatrix ::

IO (Double -> Ptr (HalideBuffer 1 Double) -> Ptr (HalideBuffer 2 Double) -> IO ())

spectralConvolutionMatrix = do

mkColumn0 <- compile $ ...

mkColumn1 <- compile $ ...

mkNext <- compile nextColumn

let convolution !s !a !b = do

size <- getBufferExtent b 1

withCropped b 1 0 1 $ mkColumn0 s a

withCropped b 1 1 1 $ mkColumn1 s b

let go !n

| n < size = do

withCropped b 1 n 1 $ mkNext (fromIntegral (n - 1)) b

go (n + 1)

| otherwise = pure ()

go 2

pure convolutionThe code may look a bit scary, but it’s mainly due to the fact that we have to

use the withCropped function for selecting specific columns. Its type signature is:

withCropped

:: Ptr (HalideBuffer n a) -- ^ buffer to slice

-> Int -- ^ dimension along which to slice

-> Int -- ^ start index

-> Int -- ^ extent

-> (Ptr (HalideBuffer n a) -> IO b) -- ^ what to to with the cropped buffer

-> IO bWe use it to select specific columns from the array b. Normally, an array library such as Massiv or ArrayFire will provide such functions, but for this simple example we’ve decided to avoid relying on one.

Since we had to implement the recursion manually outside of halide-haskell, we’re losing a bit in clarity compared to the Numba implementation above. Let’s see whether the performance makes up for the extra lines of code.

On the Python side, we use the

timeit module to measure how

long the kernel takes. And on the Haskell side we use the

timestats library. On a

laptop with an 8-core Intel i5-8265U, the results are:

- Numba: ~9 ms per evaluation;

- halide-haskell: ~1.1 ms per evaluation;

Hm… the Haskell code is faster, but can we make it even faster? The answer is yes, and this is one of the great things about Halide — it let’s us play around with the optimizations without breaking our algorithm.

We’re processing the image column-by-column, and our computation is quite short and definitely memory bound, so it’s unlikely that we’ll see any benefit in using multiple threads. But let’s give it a try anyway. The only thing we have to change is:

- define "next" (k, _m) $ ifThenElse (k `lt` n) upper lower

+ f <- define "next" (k, _m) $ ifThenElse (k `lt` n) upper lower

+ parallel k fSo now we’re giving our defined function a name and then telling Halide to

parallelize the loop over k. And the performance degrades to ~10 ms per

evaluation, as expected.

Another optimization we can try is vectorization:

- define "next" (k, _m) $ ifThenElse (k `lt` n) upper lower

+ f <- define "next" (k, _m) $ ifThenElse (k `lt` n) upper lower

+ ki <- mkVar "ki"

+ split TailShiftInwards k (k, ki) 8 f >>= vectorize kiWe tell Halide to split the loop over k into two nested loops where the inner

one, ki, runs from 0 to 7. And then, since we know the extents of the inner

loop, we tell Halide to use SIMD intrinsics to implement it. And the timings are

improved to 0.5 ms per evaluation. So the speedup is around 18x, even though

we had to do the recursion outside of halide-haskell and are actually invoking

the Halide kernels hundreds of times. Hopefully, such performance improvement

compensates for the slightly longer code.

Halide also supports so-called autoschedulers that can be used to produce a reasonable initial schedule. We also support autoschedulers in halide-haskell, but the interface is somewhat experimental. We refer the user to Halide’s tutorial on autoscheduling for an introduction to the topic.

Conclusion

This work has been kindly supported by Tweag through the Tweag Open Source Fellowship program, and we now have a working Haskell frontend for Halide. The library is still in alpha stage, but it can already compile and run kernels on CPUs and GPUs, and the performance is competitive with other libraries such as Python’s Numba.

halide-haskell brings us one step closer to being able to apply Haskell in the numerical computing domain. The next steps include writing more “halide-*” packages for better interoperability with the rest of the library ecosystem, further experimentation with GPUs, and of course using halide-haskell in real world research projects. Stay tuned!

- Even though for such a simple function, GHC is probably able to inline everything and avoid heap allocations, the generated code is still suboptimal. Discussing the reasons for it is outside the scope of this blog post, but compare the assembly code that we get from GHC and from GCC.↩

- For example, there are more than 3,500 of so called “native” functions in PyTorch (see native_functions.yaml), and this count does not even include specializations for different device and data types.↩

- Examples of JIT compilation in the numerical computing industry include: the Julia programming language, the Numba library, PyTorch (since version 2.0) and JAX (via XLA) deep learning frameworks.↩

- Users have to rely on Accelerate to generate high-performance kernels and have no way to force some low-level optimizations. For example, Trevor L. McDonell et al. explain that the reason why the hand-written CUDA implementation of the N-body problem outperforms Accelerate is the use of on-chip shared memory. Another example would be the matrix-matrix product where achieving maximal performance requires writing no fewer than six nested loops instead of the naive three. Accelerate has no way of knowing that such optimizations have to be applied and cannot perform them automatically.↩

- We are relying on the DataKinds extension to lift values to the type level.↩

Behind the scenes

If you enjoyed this article, you might be interested in joining the Tweag team.