Evaluation of Retrieval-Augmented Generation (RAG) systems is essential for any production-grade use. Without proper evaluation we end up in the world of “it works on my machine”. In the realm of AI, this would be called “it works on my questions”.

Whether you are an engineer seeking to refine your RAG systems, are intrigued by the nuances of RAG evaluation, or are eager to read more after the first part of the series (Evaluating retrieval in RAGs: a gentle introduction), you are in the right place.

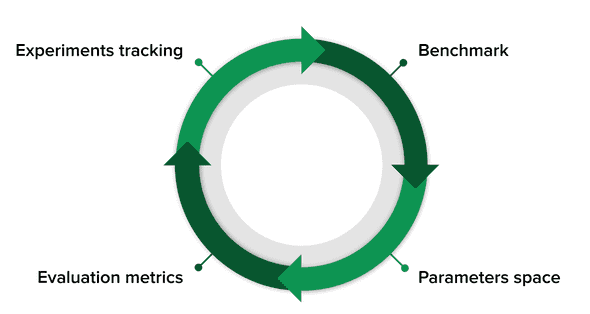

This article equips you with the knowledge needed to navigate evaluation in RAGs and the framework to systematically compare and contrast existing evaluation libraries. This framework covers benchmark creation, evaluation metrics, parameter space and experiment tracking.

An experimental framework to evaluate RAG’s retrieval

Inspired by reliability engineering, we treat RAG as a system in which different parts may fail. When the retrieval part is not working well, there is no relevant context to give to its LLM component, and thus no meaningful response: garbage in, garbage out.

Improving retrieval performance may be approached like a classic machine learning optimization: searching the space of available parameters and selecting the ones that best fit an evaluation criterion. This approach falls under the umbrella of Evaluation Driven Development (EDD) and requires:

- Creating a benchmark

- Defining the parameter space

- Defining evaluation metrics

- Tracking experiments and results

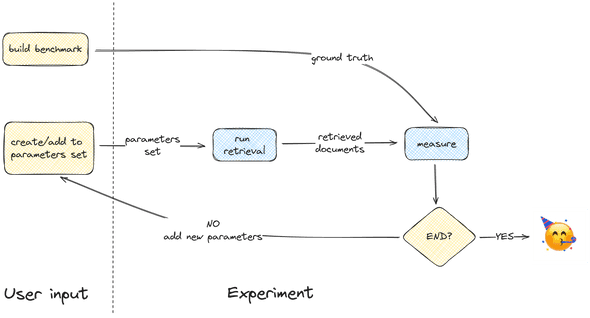

Figure 2 provides a detailed view of the development loop governing the evaluation process:

- The part on the left depicts user input (benchmarks and parameters).

- The retrieval process on the right includes requests to the vector database, but also changes to the database itself: a new embedding model means a new representation of the documents in the vector database.

- The final step involves evaluating retrieved documents using a set of evaluation metrics.

This loop is repeated until the evaluation metrics meet the acceptance criteria.
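The loop in Figure 2 can be sketched in a few lines of Python. This is a minimal sketch under assumptions of our own: `evaluation_loop`, `run_and_score` and the dictionary-shaped configurations are hypothetical names, and `run_and_score` stands in for everything on the right of the figure (re-indexing the vector database if needed, retrieving documents and computing a metric). The scoring function in the usage part is a placeholder, not a real metric.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, Iterable, List, Optional, Tuple

# Assumed shapes: a configuration is a dict of retrieval parameters and a
# benchmark is a list of (question, relevant_documents) pairs.
Config = Dict[str, Any]
Benchmark = List[Tuple[str, List[str]]]


@dataclass
class RunResult:
    config: Config
    score: float


def evaluation_loop(
    candidates: Iterable[Config],
    benchmark: Benchmark,
    run_and_score: Callable[[Config, Benchmark], float],
    acceptance_threshold: float,
) -> Tuple[Optional[RunResult], List[RunResult]]:
    """Try configurations until one meets the acceptance threshold.

    Returns the accepted run (or None) and the history of all runs.
    """
    history: List[RunResult] = []
    for config in candidates:
        # Hypothetical hook: re-index if needed, retrieve, then compute a metric.
        result = RunResult(config, run_and_score(config, benchmark))
        history.append(result)  # keep every run so they can be compared later
        if result.score >= acceptance_threshold:
            return result, history
    return None, history


def placeholder_score(config: Config, benchmark: Benchmark) -> float:
    # Stand-in for a real metric such as mean recall; NOT a meaningful score.
    return config["top_k"] / 10


candidates = [{"chunk_size": s, "top_k": k} for s in (250, 500) for k in (5, 10)]
best, history = evaluation_loop(
    candidates, [("What is a BUILD file?", [])], placeholder_score, 0.9
)
print(best.config, len(history))  # → {'chunk_size': 250, 'top_k': 10} 2
```

Returning the full history, and not only the accepted run, keeps the rejected configurations available for comparison, which is what experiment tracking later builds on.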

In the following sections we will cover the different components of the evaluation framework in more detail.

Building a benchmark

Building a benchmark is the first step towards a repeatable experimental framework. While it should contain at least a list of questions, the exact form of the benchmark depends on which evaluation metrics will be used and may consist of a list of any of the following:

- Questions

- Pairs of (question, answer)

- Pairs of (question, relevant_documents)
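One possible way to represent these three forms in Python is a single record with optional fields. This is a hypothetical data model for illustration, not the one used in our experiments; `BenchmarkItem` is our own name and the second example question is made up.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class BenchmarkItem:
    """One benchmark entry; which optional fields are filled decides the usable metrics."""

    question: str
    answer: Optional[str] = None                    # for (question, answer) pairs
    relevant_documents: Optional[List[str]] = None  # for (question, relevant_documents) pairs

    def supports_ir_metrics(self) -> bool:
        # Precision and recall need a ground truth of documents to compare against.
        return bool(self.relevant_documents)


benchmark = [
    BenchmarkItem(
        "What is a BUILD file?",
        relevant_documents=["https://bazel.build/foo", "https://bazel.build/bar"],
    ),
    BenchmarkItem("How do I run tests?"),  # hypothetical question-only entry
]
print([item.supports_ir_metrics() for item in benchmark])  # → [True, False]
```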

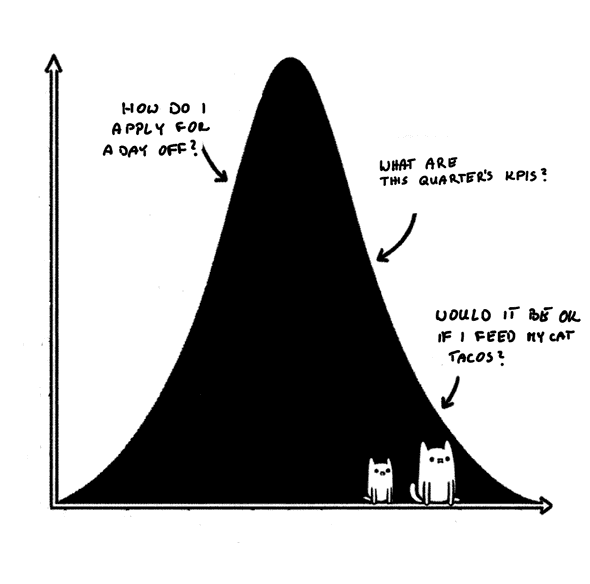

Building a representative benchmark

Like collecting requirements for a product, we need to understand how chatbot users are going to use the RAG system and what kinds of questions they are going to ask. It is therefore important to involve someone familiar with the knowledge base to help compile the questions and identify the necessary resources. The collected questions should represent the users’ experience: a statistician would say that a benchmark should be a representative sample of questions. This allows us to measure retrieval quality correctly. For example, with an internal company handbook, users will most likely ask about the company goals or how some internal processes work, and probably not about the dietary requirements of a cat (see Figure 3).

Benchmark generation

Benchmark datasets can be collected through the following methods:

- Human-created: A human creates a list of questions based on their knowledge of the document base.

- LLM-generated: Questions (and sometimes answers) are generated by an LLM using documents from the database.

- Combined human and LLM: Human-provided benchmark questions are augmented with questions reformulated by LLMs.

The hard part in collecting a benchmark dataset is obtaining representative and varied questions. Human-generated benchmarks contain the questions typically asked of the tool, but in low volume. Machine-generated benchmarks, on the other hand, may be larger in scale but may not accurately reflect real user behavior.

Manually-created benchmarks

In the experiments we ran at Tweag, we used a definition of a benchmark where you have not only questions but also the expected output. This makes the benchmark a labeled dataset (more details on that in an upcoming blog post). Note that we do not give direct answers to the benchmark questions; instead, we provide relevant documents, for example a list of URLs of web pages containing the relevant information for a given question. This formulation allows us to use classical ML measures like precision and recall. This is not the case for the other benchmark options, which need to be evaluated with LLM-based evaluation metrics (discussed further in the corresponding section).

Here’s an example of the (question, relevant_documents) option:

("What is a BUILD file?", ["https://bazel.build/foo", "https://bazel.build/bar"])

Automating benchmark creation

It is possible to automate the creation of questions. Indeed, MLflow, LlamaIndex and Ragas allow you to use LLMs to generate questions from your document base. Questions created by humans, whether written specifically for the benchmark or collected from users, result in smaller benchmarks; automation allows for scaling to larger ones. However, LLM-generated questions lack the complexity of human questions and are typically based on a single document. Moreover, they do not represent typical usage of the document base (after all, not all questions are created equal), and classical ML measures are not directly applicable.

Reformulating questions with LLMs

Another way to artificially augment a benchmark is to reformulate its questions with LLMs. While this does not increase coverage of the documents, it allows for a broader evaluation of the system. Note that if the benchmark associates answers or relevant documents with each question, the reformulated questions should keep the same ones.
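The constraint above is easy to enforce in code: each reformulated question simply inherits the ground truth of the question it came from. In this sketch, `augment_with_reformulations` is a hypothetical helper and `toy_reformulate` is a placeholder for an LLM call; no real model is invoked.

```python
from typing import Callable, List, Tuple

Item = Tuple[str, List[str]]  # (question, relevant_documents)


def augment_with_reformulations(
    benchmark: List[Item],
    reformulate: Callable[[str], List[str]],
) -> List[Item]:
    """Add reformulated questions, each inheriting the original's relevant documents."""
    augmented: List[Item] = []
    for question, relevant_documents in benchmark:
        augmented.append((question, relevant_documents))
        for variant in reformulate(question):
            # Ground truth is copied unchanged: rephrasing must not change what is relevant.
            augmented.append((variant, list(relevant_documents)))
    return augmented


def toy_reformulate(question: str) -> List[str]:
    # Placeholder for an LLM prompted to paraphrase the question.
    return [f"Can you explain: {question}"]


augmented = augment_with_reformulations(
    [("What is a BUILD file?", ["https://bazel.build/foo"])], toy_reformulate
)
print(augmented[1])  # → ('Can you explain: What is a BUILD file?', ['https://bazel.build/foo'])
```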

A RAG-specific data model

What is the parameter search space for the best-performing retrieval?

A subset of the search space parameters is connected with the way documents are represented in the vector database¹:

- The embedding model and its parameters.

- The chunking method, for example RecursiveCharacterTextSplitter, and the parameters of this chunking model, like chunk_size.
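To make chunk_size and chunk_overlap concrete, here is a sketch of the simplest chunking strategy: fixed-size character windows that overlap. This is a swapped-in technique for illustration, not RecursiveCharacterTextSplitter, which tries to split on separators such as blank lines and newlines before falling back to individual characters; `chunk_text` is a hypothetical name.

```python
from typing import List


def chunk_text(text: str, chunk_size: int, chunk_overlap: int) -> List[str]:
    """Split text into character windows of chunk_size overlapping by chunk_overlap."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    step = chunk_size - chunk_overlap  # how far each window advances
    chunks: List[str] = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


print(chunk_text("abcdefghij", chunk_size=4, chunk_overlap=1))
# → ['abcd', 'defg', 'ghij']
```

Even in this toy version, changing either parameter produces a different set of chunks, and therefore a different set of vectors in the database.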

Another subset of the search space parameters is connected to how we search the database and preprocess the query:

- The top_k parameter, representing the top k matching results.

- The preprocessing_model, a function that takes a query sent by the RAG user and cleans it up before performing search on the vector database. The preprocessing function is useful for queries like:

Please give me a table of Modus departments ordered by the number of employees.

In this case, it is better for the query sent to the vector database to contain only:

Modus departments with number of employees

because the “table” part of the query concerns the formatting of the output, not the semantic search.
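As an illustration of what a preprocessing_model might do, here is a deliberately simple, rule-based sketch. The phrase patterns and the `preprocess_query` name are our own assumptions, not the configuration used in our experiments; a real setup might instead ask an LLM to rewrite the query.

```python
import re

# Hypothetical formatting phrases that matter for the final answer but not for
# semantic search. Each pattern is anchored at the start of the remaining query.
FORMATTING_PATTERNS = [
    r"^\s*(please\s+)?(give|show)\s+me\s+",
    r"^\s*(a\s+)?(table|list|bullet\s+list)\s+of\s+",
]


def preprocess_query(query: str) -> str:
    """Strip leading formatting instructions so only the semantic content is embedded."""
    cleaned = query.strip().rstrip(".?!")
    for pattern in FORMATTING_PATTERNS:
        cleaned = re.sub(pattern, "", cleaned, flags=re.IGNORECASE)
    return cleaned.strip()


print(preprocess_query(
    "Please give me a table of Modus departments ordered by the number of employees."
))
# → Modus departments ordered by the number of employees
```

The output still contains “ordered by”, which is also about formatting rather than content. Hand-written rules only catch the phrasings someone anticipated, which is the main limitation of this approach.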

The example below shows a JSON representation of the retrieval configuration:

{
  "retrieval": {
    "collection_name": "default",
    "embedding_model": {
      "name": "langchain.embeddings.SentenceTransformerEmbeddings",
      "parameters": { "model_name": "all-mpnet-base-v2" }
    },
    "chunking_model": {
      "name": "langchain.text_splitter.RecursiveCharacterTextSplitter",
      "parameters": { "chunk_size": 500, "chunk_overlap": 5 }
    },
    "top_k": 10,
    "preprocessing_model": {
      "name": ""
    }
  }
}
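To search the parameter space, one option is to generate one configuration per combination of values. The sketch below assumes a plain grid search over two parameters; `expand_grid` is a hypothetical helper, and the base configuration is a trimmed copy of the JSON above, keeping only the fields varied here.

```python
import copy
import itertools
from typing import Any, Dict, Iterator, Sequence

# Trimmed copy of the retrieval configuration above.
BASE_CONFIG: Dict[str, Any] = {
    "retrieval": {
        "collection_name": "default",
        "chunking_model": {
            "name": "langchain.text_splitter.RecursiveCharacterTextSplitter",
            "parameters": {"chunk_size": 500, "chunk_overlap": 5},
        },
        "top_k": 10,
    }
}


def expand_grid(
    base: Dict[str, Any], chunk_sizes: Sequence[int], top_ks: Sequence[int]
) -> Iterator[Dict[str, Any]]:
    """Yield one full configuration per (chunk_size, top_k) combination."""
    for chunk_size, top_k in itertools.product(chunk_sizes, top_ks):
        config = copy.deepcopy(base)  # each variant gets its own copy; base stays untouched
        config["retrieval"]["chunking_model"]["parameters"]["chunk_size"] = chunk_size
        config["retrieval"]["top_k"] = top_k
        yield config


variants = list(expand_grid(BASE_CONFIG, chunk_sizes=[250, 500, 1000], top_ks=[5, 10]))
print(len(variants))  # → 6
```

Grid search is only one way to explore this space; random search over the same configuration shape would work just as well. Since chunk_size changes the document representation, variants that differ in it need separate collections in the vector database, whereas variants that differ only in top_k can share one.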

Evaluation metrics

The first set of evaluation metrics we would like to present has roots in the well-established field of Information Retrieval. Given a set of documents retrieved from the vector database and a ground truth of documents that should have been retrieved, we can compute information retrieval measures, including but not limited to precision and recall.

For more details and a discussion of other RAG-specific evaluation metrics, including those computed with the help of LLMs, have a look at our first blog post in the RAG series.

The measure you choose should best fit your evaluation objective. For example, it may be the mean value of recall computed over the questions in the ground truth dataset.
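With a (question, relevant_documents) benchmark, these measures take only a few lines. The sketch below computes precision and recall at k, plus the mean recall over the benchmark mentioned above. It assumes that retrieved chunks have already been mapped back to deduplicated source-document URLs so they can be compared with the ground truth; the function names and the third URL are our own.

```python
from typing import Dict, List, Sequence


def precision_at_k(retrieved: Sequence[str], relevant: Sequence[str], k: int) -> float:
    """Share of the top-k retrieved documents that are relevant.

    Divides by the number of documents actually returned (at most k).
    """
    top = list(retrieved)[:k]
    if not top:
        return 0.0
    relevant_set = set(relevant)
    return sum(doc in relevant_set for doc in top) / len(top)


def recall_at_k(retrieved: Sequence[str], relevant: Sequence[str], k: int) -> float:
    """Share of the relevant documents that appear in the top-k retrieved."""
    relevant_set = set(relevant)
    if not relevant_set:
        return 0.0
    return len(relevant_set & set(list(retrieved)[:k])) / len(relevant_set)


def mean_recall_at_k(
    results: Dict[str, List[str]], ground_truth: Dict[str, List[str]], k: int
) -> float:
    """Recall@k averaged over every benchmark question (missing results count as 0)."""
    scores = [recall_at_k(results.get(q, []), docs, k) for q, docs in ground_truth.items()]
    return sum(scores) / len(scores) if scores else 0.0


q = "What is a BUILD file?"
ground_truth = {q: ["https://bazel.build/foo", "https://bazel.build/bar"]}
results = {q: ["https://bazel.build/foo", "https://bazel.build/baz"]}
print(precision_at_k(results[q], ground_truth[q], k=2))  # → 0.5
print(recall_at_k(results[q], ground_truth[q], k=2))     # → 0.5
print(mean_recall_at_k(results, ground_truth, k=2))      # → 0.5
```

Other conventions exist, for instance dividing precision by k even when fewer documents are returned; whichever one is chosen should stay fixed across experiments so that runs remain comparable.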

Experiment tracking

What information about the experiment do we need to track to make it reproducible? Retrieval parameters, for sure! But this is not enough. The choice of the vector database, the benchmark data, the version of the code you use to run your experiment, among others, all have a say in the results.

If you’ve done some MLOps before, you can see that this is not a new problem. Fortunately, frameworks for data and machine learning like MLflow and DVC, together with version-controlled code, make tracking and reproducing experiments possible.

MLflow supports experiment tracking, including logging parameters, saving results as artifacts and logging computed metrics, which is useful for comparing different runs and models.

DVC (Data Version Control) can be used to keep track of the input data, model parameters and databases. Combined with Git, it allows for “time travelling” to a different version of the experiment.

We also used ChromaDB as a vector database. The “collections” feature is particularly useful to manage different vector representations (chunking and embedding) of text data in the same database.
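One way to keep several vector representations apart is to derive the collection name from the parameters that shape the vectors, so that each (embedding, chunking) combination maps to its own collection and is reused when the same combination comes up again. The naming scheme below is a hypothetical sketch; we assume the resulting names (a prefix plus 16 hexadecimal characters) satisfy the naming rules of the vector database in use.

```python
import hashlib
import json
from typing import Any, Dict


def collection_name_for(embedding_model: Dict[str, Any], chunking_model: Dict[str, Any]) -> str:
    """Derive a stable collection name from the settings that determine the stored vectors.

    top_k and query preprocessing are left out on purpose: they change how we
    search, not what is stored.
    """
    key = json.dumps(
        {"embedding_model": embedding_model, "chunking_model": chunking_model},
        sort_keys=True,  # same parameters in any key order give the same name
    )
    digest = hashlib.sha256(key.encode("utf-8")).hexdigest()[:16]
    return f"retrieval-{digest}"


a = collection_name_for({"model_name": "all-mpnet-base-v2"}, {"chunk_size": 500, "chunk_overlap": 5})
b = collection_name_for({"model_name": "all-mpnet-base-v2"}, {"chunk_overlap": 5, "chunk_size": 500})
c = collection_name_for({"model_name": "all-mpnet-base-v2"}, {"chunk_size": 250, "chunk_overlap": 5})
print(a == b, a == c)  # → True False
```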

It is also good practice to save the retrieved references (for example in a JSON file), to make them easy to inspect and share.
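Saving the retrieved references can be as simple as dumping a dictionary to JSON. In this sketch, `save_retrieved_references` and the file layout (a run identifier plus the documents retrieved for each question) are our own assumptions.

```python
import json
import tempfile
from pathlib import Path
from typing import Dict, List


def save_retrieved_references(path: Path, run_id: str, references: Dict[str, List[str]]) -> None:
    """Write the documents retrieved for each question as human-readable JSON."""
    payload = {"run_id": run_id, "retrieved": references}
    path.write_text(json.dumps(payload, indent=2, ensure_ascii=False), encoding="utf-8")


with tempfile.TemporaryDirectory() as tmp:
    out = Path(tmp) / "retrieved_references.json"
    save_retrieved_references(out, "run-001", {"What is a BUILD file?": ["https://bazel.build/foo"]})
    loaded = json.loads(out.read_text(encoding="utf-8"))

print(loaded["retrieved"])  # → {'What is a BUILD file?': ['https://bazel.build/foo']}
```

If MLflow is used for tracking, such a file could then be attached to the corresponding run as an artifact, for instance with mlflow.log_artifact.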

Limitations

Similar to training a classical ML model, the evaluation framework outlined in this post carries the risk of overfitting: the parameters end up tuned to the benchmark questions and may not generalize to the questions real users ask. An intuitive solution is to divide the dataset into training and testing subsets. However, this isn’t always feasible: human-generated datasets tend to be small, as human effort does not scale efficiently. LLM-assisted benchmark generation might alleviate this problem.
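When the benchmark is large enough, holding out part of it is cheap to implement. The sketch below (`split_benchmark` is a hypothetical helper) shuffles with a fixed seed so that the split is reproducible, returning one part for tuning and another for a final check.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_benchmark(
    items: Sequence[T], test_fraction: float = 0.3, seed: int = 0
) -> Tuple[List[T], List[T]]:
    """Return (tuning_set, held_out_set) after a seeded shuffle."""
    if not 0 < test_fraction < 1:
        raise ValueError("test_fraction must be strictly between 0 and 1")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    n_test = max(1, round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


questions = [f"question {i}" for i in range(10)]
tune, held_out = split_benchmark(questions, test_fraction=0.3)
print(len(tune), len(held_out))  # → 7 3
```

If the benchmark was augmented with LLM reformulations, it is probably safer to split by original question and keep all reformulations of a question on the same side; otherwise near-duplicates leak into the held-out set.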

Summary

In this blog post, we proposed an alternative to the problematic “eye-balling” approach to RAG evaluation: a systematic and quantitative retrieval evaluation framework.

We demonstrated how to construct it, beginning with the crucial step of building a benchmark dataset representative of real-world user queries. We also introduced a RAG-specific data model and evaluation metrics to define and measure different states of the RAG system.

This evaluation framework draws on methodologies and best practices from Machine Learning, Software Development and Information Retrieval.

Leveraging this experimental framework with appropriate tools allows practitioners to enhance the reliability and effectiveness of RAGs, an essential prerequisite for production-ready use.

Thanks to Simeon Carstens and Alois Cochard for their reviews of this article.

¹ An implicit assumption here is that semantic search and a vector database are in use, but the data model may be generalized to use keyword search as well.

Behind the scenes

Maria, a mathematician turned Senior Data Engineer, excels at blending her analytical prowess and software development skills within the tech industry. Her role at Tweag is twofold: she is not only a key contributor to innovative AI projects but also heavily involved in data engineering aspects, such as building robust data pipelines and ensuring data integrity. This skill set was honed through her transition from academic research in numerical modelling of turbulence to the realm of software development and data science.

Nour is a data scientist/engineer who recently made the leap of faith from Academia to Industry. She has worked on Machine Learning, Data Science and Data Engineering problems in various domains. She has a PhD in Computer Science and currently lives in Paris, where she stubbornly tries to get her native Lebanese plants to live on her tiny Parisian balcony.
