Time flies, and while it occasionally drops dead from the sky on Fridays or Monday mornings, that definitely was not the case during an internal two-day hackathon Tweag’s GenAI group held in February.

As one of our projects, we wanted to develop a tool that would tell us where exactly time flew to in a given period of time, and so was born work-dAIgest: a Python tool that uses your standard workplace tools (Google Calendar, GitHub, Jira, Confluence, Slack, …) and a large language model (LLM) to summarize what you’ve accomplished.

In this blog post, we’ll present a proof-of-concept of work-dAIgest, show you what’s under the hood and finally touch briefly on a couple of lessons we learned during this short but fun project.

Overview

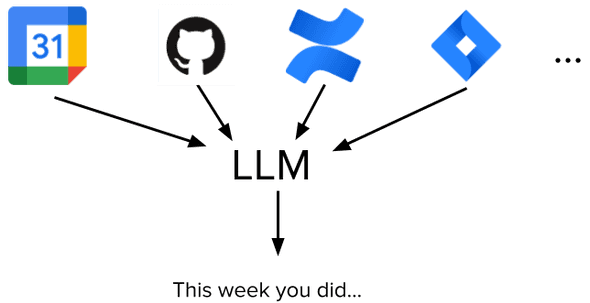

The following diagram illustrates the general idea:

We want to get work-related data from multiple sources (your agenda, GitHub, Jira, Confluence, Slack, emails, …) and ask an LLM to summarize what these data say about your work.

As experienced data engineers and scientists, we knew that in almost any data or AI project, most of the time is spent on data retrieval, cleaning and preprocessing. With that in mind, and only two days to build a first version, we restricted ourselves to a narrower scope of two data sources that nevertheless cover different work responsibilities:

- GitHub issues / PRs / commits: to reflect daily work of a developer

- Google Calendar meetings / events: to reflect daily work of a manager

We decided to rely on GitHub’s Search API to extract commits and pull requests / issues.

For calendar data, we chose to export .ics files manually, as these are easier and quicker to handle than the Google Calendar API.

Data preprocessing

Calendar data

Calendar data often comes in ICS format (iCalendar, short for Internet Calendaring and Scheduling), and we resorted to the ics Python library to parse it.

That way, for each event, we created a string with the following lines:

<event name>

duration: <event duration>

description: <event description>

attendees: <attendee name 1> - <attendee name 2> - ...

-------------------

We then placed the concatenation of these strings in the LLM prompt.
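The formatting step can be sketched as follows. This is a minimal illustration, not the actual work-dAIgest code: the `CalendarEvent` dataclass is a hypothetical stand-in for whatever the ics library returns after parsing, and the function names and sample event are made up for this example.

```python
from dataclasses import dataclass, field
from datetime import timedelta

# Dashed line that closes each event block (length chosen arbitrarily here)
SEPARATOR = "-" * 19


@dataclass
class CalendarEvent:
    """Hypothetical stand-in for an event parsed from an .ics file."""
    name: str
    duration: timedelta
    description: str = ""
    attendees: list[str] = field(default_factory=list)


def format_event(event: CalendarEvent) -> str:
    """Render one event in the line-based layout described above."""
    return "\n".join([
        event.name,
        f"duration: {event.duration}",
        f"description: {event.description}",
        "attendees: " + " - ".join(event.attendees),
        SEPARATOR,
    ])


def format_calendar(events: list[CalendarEvent]) -> str:
    """Concatenate all formatted events into one prompt-ready string."""
    return "\n".join(format_event(event) for event in events)


# Made-up sample event, for illustration only
standup = CalendarEvent(
    name="Daily standup",
    duration=timedelta(minutes=15),
    description="Team sync",
    attendees=["Alice", "Bob"],
)
calendar_data = format_calendar([standup])
print(calendar_data)
```

Keeping the layout in one small function makes it easy to experiment with which event fields end up in the prompt.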

GitHub data

Information about commits, issues and pull requests could be extracted via GitHub’s Search API.

We had to be careful to convert the dates given by the user into the right date-time format (ISO 8601, YYYY-MM-DDTHH:MM:SS) before putting them into the search string.
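As a rough sketch of that date handling, the helper below turns two timezone-aware datetimes into a search string. The function names and the user name are hypothetical, and the choice of the `author:`, `committer-date:` and `updated:` qualifiers is an assumption about which search filters are useful here, not necessarily what work-dAIgest uses.

```python
from datetime import datetime, timezone

ISO_FORMAT = "%Y-%m-%dT%H:%M:%S"


def github_date_range(lower: datetime, upper: datetime) -> str:
    """Format a date range as two ISO 8601 timestamps joined by '..'.

    Both datetimes are expected to be timezone-aware. They are normalized
    to UTC and suffixed with "Z", which avoids a "+" offset in the query.
    """
    lo = lower.astimezone(timezone.utc).strftime(ISO_FORMAT) + "Z"
    hi = upper.astimezone(timezone.utc).strftime(ISO_FORMAT) + "Z"
    return f"{lo}..{hi}"


def commit_search_query(user: str, lower: datetime, upper: datetime) -> str:
    """Search string for commits authored by `user` in the given range."""
    return f"author:{user} committer-date:{github_date_range(lower, upper)}"


def issue_search_query(user: str, lower: datetime, upper: datetime) -> str:
    """Search string for issues / PRs by `user` updated in the given range."""
    return f"author:{user} updated:{github_date_range(lower, upper)}"


# Hypothetical user and period, for illustration only
lower = datetime(2024, 2, 5, 9, 0, 0, tzinfo=timezone.utc)
upper = datetime(2024, 2, 9, 18, 0, 0, tzinfo=timezone.utc)
commit_query = commit_search_query("octocat", lower, upper)
issue_query = issue_search_query("octocat", lower, upper)
print(commit_query)
print(issue_query)
```

The resulting strings would be passed as the `q` parameter of the corresponding search endpoint.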

Once we had the search results, we could output them as the JSON of a list of objects, each with the following fields:

- date (issue / PR comment or commit date),

- text (issue / PR text or commit message),

- repository (repository name),

- action (either created, updated, or closed).

This JSON was then included as-is in the LLM prompt.
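To make the shape of this JSON concrete, here is a small sketch. Only the four field names come from the description above; the `GitHubActivity` class, the helper name and the sample records are hypothetical.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class GitHubActivity:
    """One search result, reduced to the four fields sent to the LLM."""
    date: str        # issue / PR comment or commit date
    text: str        # issue / PR text or commit message
    repository: str  # repository name
    action: str      # "created", "updated" or "closed"


def to_prompt_json(activities: list[GitHubActivity]) -> str:
    """Serialize the activities as a JSON list of objects."""
    return json.dumps([asdict(activity) for activity in activities], indent=2)


# Made-up records, for illustration only
activities = [
    GitHubActivity(
        date="2024-02-06T10:12:00Z",
        text="Fix date parsing in calendar loader",
        repository="example-org/example-repo",
        action="closed",
    ),
    GitHubActivity(
        date="2024-02-07T15:40:00Z",
        text="Add command line entry point",
        repository="example-org/example-repo",
        action="created",
    ),
]
github_data = to_prompt_json(activities)
print(github_data)
```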

Large language model

The LLM was at the heart of this project, and with it came two important questions: What LLM to use and how to design the prompt.

LLM choice

While the choice of LLMs out there was becoming overwhelming, we could not just use any LLM. Instead, we had multiple requirements:

- it should be sufficiently powerful,

- it must be appropriate to use with confidential data (calendar events, data from private GitHub repositories), lest that data leak into later versions of the LLM,

- it should be readily available to use,

- it should be cheap for one-off use.

These constraints led us to initially implement support for Llama 2 70B and Jurassic-2 as deployed on Amazon Bedrock. Claude-3 models became available in Bedrock a couple of weeks after the first work-dAIgest prototype was finished, and we added support for them straight away.

Bedrock assured us that our data would stay private. It was easy to use via simple AWS Python API calls, and its pay-on-demand model per token was very cost-efficient.
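For readers who have not used Bedrock before, a call to a Claude 3 model through boto3 looks roughly like the sketch below. It is not taken from the work-dAIgest code base: the function names, the default region and the model ID are assumptions, and running `summarize` requires AWS credentials with access to the model. Note also that the Messages API used here handles conversation roles itself, unlike a text-completion prompt.

```python
import json

# Assumed model identifier; check which model IDs are enabled in your account
MODEL_ID = "anthropic.claude-3-sonnet-20240229-v1:0"


def build_claude_request(prompt: str, max_tokens: int = 1024) -> str:
    """Build the JSON request body for a single-turn Claude 3 message."""
    return json.dumps({
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,
        "messages": [
            {"role": "user", "content": [{"type": "text", "text": prompt}]},
        ],
    })


def summarize(prompt: str, region: str = "us-east-1") -> str:
    """Send the prompt to Bedrock and return the generated text."""
    # Imported lazily so that the request builder works without boto3 installed
    import boto3

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.invoke_model(
        modelId=MODEL_ID,
        body=build_claude_request(prompt),
    )
    payload = json.loads(response["body"].read())
    return payload["content"][0]["text"]


# Building the request body needs no AWS access
request_body = build_claude_request("Summarize my week.")
print(request_body)
```

Other model families on Bedrock expect differently shaped request bodies, so supporting several models means one such builder per family.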

Prompt engineering

The first draft of our prompt was very simple yet already worked rather well. It took just a few not-too-difficult clarifications to get an output that was more or less correct and in the format and style we wanted. Finally, we had to add some “conversational context” to the prompt, namely its first and last lines. The final prompt then was:

Human:

Summarize the events in the calendar and my work on GitHub and tell me what I did during the covered period of time.

Please mention the covered period of time ({lower_date} - {upper_date}) in your answer.

If the event has a description, include a summary.

Include attendees names.

If the event is lunch, do not include it.

For GitHub issues / pull requests / commits, don't include the full text / description / commit message,

but summarize it if it is longer than two sentences.

Calendar events:

```

{calendar_data}

```

These are GitHub issues, pull requests and commits I worked on, in a JSON format:

```

{github_data}

```

AI:

Example

After all these details, you surely want to see the result of a work-dAIgest run!

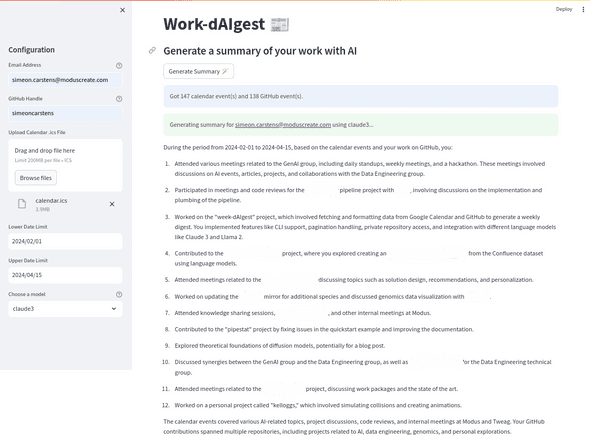

You shall not be disappointed - work-dAIgest can be used either from the command line,

or via a web application, and the latter is shown in the following screenshot:

Note that tiny changes to the prompt sometimes resulted in wildly different summaries and deterioration of quality. But some prompt “settings” are also a matter of taste: if you decide to try out the tool yourself, feel free to play around with the prompt to adapt the output style to your liking!
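One way to make such experiments easy is to keep the prompt as a template and the style instructions as a separate list. The following is a sketch under assumptions: the template is an abbreviated version of the prompt shown earlier, and `build_prompt`, `extra_instructions` and the sample inputs are names and values invented for this example.

```python
# Built programmatically so the fence markers do not clash with this code block
FENCE = "`" * 3

PROMPT_TEMPLATE = """Human:
Summarize the events in the calendar and my work on GitHub and tell me what I did during the covered period of time.
Please mention the covered period of time ({lower_date} - {upper_date}) in your answer.
{extra_instructions}
Calendar events:
{fence}
{calendar_data}
{fence}
These are GitHub issues, pull requests and commits I worked on, in a JSON format:
{fence}
{github_data}
{fence}
AI:"""


def build_prompt(calendar_data, github_data, lower_date, upper_date,
                 extra_instructions=()):
    """Fill the template; extra instructions are inserted one per line."""
    return PROMPT_TEMPLATE.format(
        lower_date=lower_date,
        upper_date=upper_date,
        extra_instructions="\n".join(extra_instructions),
        fence=FENCE,
        calendar_data=calendar_data,
        github_data=github_data,
    )


# Made-up inputs, for illustration only
prompt = build_prompt(
    calendar_data="Daily standup\nduration: 0:15:00",
    github_data='[{"action": "closed"}]',
    lower_date="2024-02-05",
    upper_date="2024-02-09",
    extra_instructions=[
        "Include attendees names.",
        "If the event is lunch, do not include it.",
    ],
)
print(prompt)
```

Braces inside the substituted JSON are left alone, because `str.format` only interprets placeholders in the template itself.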

Lessons learned

Building an application with such tight time constraints is an occasion to observe and learn about our everyday work as developers and engineers as a whole.

- LLMs allow fast prototyping of NLP applications

  It was surprisingly easy to create a usable application in very little time, and not just any application - this would have been very hard or almost impossible to do just a couple of years back, when LLMs were not a thing yet. This also testifies to how easy and affordable it has become to use LLMs in your own projects: tools like AWS Bedrock put powerful models at your disposal without forcing you to maintain and pay for your own deployment - just pay as you go, which opens up countless opportunities for personal and one-off applications.

- Data processing challenges remain unchanged

  With all the buzz about how LLMs help developers and engineers, some things stay the same: working with data is hard, and no LLM tells you which data to include or exclude or how to preprocess your data so it fits your specific use case. Even for small projects, data science and engineering is still something you have to do yourself, and we doubt that this will change any time soon.

Conclusion

Building work-dAIgest in a two-day internal hackathon was a great experience.

While it is still a proof-of-concept, it is very much usable and we hope to improve it in the future, mostly by including more sources of data.

If you want to try out work-dAIgest yourself, or contribute to it, don’t hesitate to check out the work-dAIgest GitHub repository!

Also, stay tuned for blog posts describing our other GenAI projects!

Behind the scenes

Simeon is a theoretical physicist who has undergone several transformations. A journey through Markov chain Monte Carlo methods, computational structural biology, a wet lab and renowned research institutions in Germany, Paris and New York finally led him back to Paris and to Tweag, where he is currently leading projects for a major pharma company. During pre-meeting smalltalk, ask him about rock climbing, Argentinian tango or playing the piano!

Nour is a data scientist/engineer who recently made the leap of faith from Academia to Industry. She has worked on Machine Learning, Data Science and Data Engineering problems in various domains. She has a PhD in Computer Science and currently lives in Paris, where she stubbornly tries to get her native Lebanese plants to live on her tiny Parisian balcony.

If you enjoyed this article, you might be interested in joining the Tweag team.