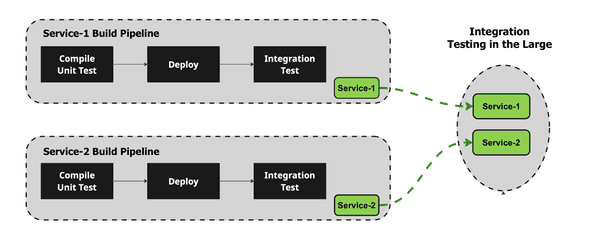

Integration testing, a crucial phase of the software development life cycle, plays a pivotal role in ensuring that individual components of a system work seamlessly when combined. Other than unit tests at an atomic level, everything in the software development process is a kind of integration of pieces. This can be integration-in-the-small, like the integration of components, or it can be integration-in-the-large, such as the integration of services and APIs. While integration testing is essential, it is not without its challenges. In this blog post, we’ll explore the issues of speed, reliability, and maintenance that often plague integration testing processes.

Speed

Integration testing involves testing the interactions between different components of a system. Testing a feature might require integrating different versions of the dependent components. As the complexity of software grows, so does the number of interactions that need to be tested. This increase in interactions can significantly slow down the testing process. With modern applications becoming more intricate, the time taken for integration tests can become a bottleneck, hindering the overall development speed.

Automating integration tests can be slow and expensive because:

- Dependent parts must be deployed to test environments.

- All dependent parts must have the correct version.

- The CI must first run all preceding tasks, such as static checks, unit tests, reviews and deployment.

Only then can we run the integration test suite. These steps take time; for example, calling an endpoint on a microservice requires HTTP calls, which makes integration testing slow. Any issue found during integration testing means spending development effort on a fix and then repeating all of these steps, which also makes the process expensive. The later the feedback about an issue, the more expensive the fix becomes.

These difficulties can be partially addressed with the following strategies:

- Employ parallel testing: Running tests concurrently can significantly reduce the time taken for integration testing.

- Prioritize tests: Identify critical integration points and focus testing efforts on these areas to ensure faster feedback on essential functionalities. Prioritization should always be done, but we must be careful not to miss necessary test cases. Furthermore, reporting issues against non-essential parts can generate too much noise, which can reduce the overall quality of the testing. So there is a balance to be struck.

But we nevertheless have to wait for all upstream processes to finish before, as a final step, the prioritized / parallelized integration tests can be run.
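As a rough illustration of the two strategies above, the following Python sketch selects tests by priority and runs the selected ones concurrently. The test functions, their names, and the priority numbers are hypothetical; each `time.sleep` stands in for a slow call to a deployed service.

```python
import time
from concurrent.futures import ThreadPoolExecutor


# Hypothetical integration checks; each sleep stands in for a slow HTTP call.
def check_login():
    time.sleep(0.2)
    return True


def check_payment():
    time.sleep(0.2)
    return True


def check_newsletter_banner():
    time.sleep(0.2)
    return True


# (priority, name, test): a lower number marks a more critical integration point.
TESTS = [
    (2, "newsletter_banner", check_newsletter_banner),
    (1, "login", check_login),
    (1, "payment", check_payment),
]


def run_prioritized(tests, max_priority, workers=4):
    """Run tests with priority <= max_priority concurrently; return {name: passed}."""
    selected = sorted(
        (t for t in tests if t[0] <= max_priority),
        key=lambda t: (t[0], t[1]),
    )
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = {name: pool.submit(fn) for _, name, fn in selected}
        return {name: future.result() for name, future in futures.items()}


if __name__ == "__main__":
    start = time.perf_counter()
    results = run_prioritized(TESTS, max_priority=1)
    print(results, f"took {time.perf_counter() - start:.2f}s")
```

Because the selected checks overlap in time, the wall-clock cost is close to that of the slowest check rather than the sum of all of them. Raising `max_priority` widens the selection, which is where the balance between coverage and noise has to be decided.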

Reliability

The reliability of integration tests is a common concern. Flaky tests, which produce inconsistent results, can erode the confidence in the testing process. Flakiness can stem from various sources such as external dependencies, race conditions, or improper test design. Unreliable tests can lead to false positives and negatives, making it challenging to identify genuine issues.
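One simple way to surface flakiness is to run the same test several times and compare the outcomes. The sketch below is a minimal illustration, not a replacement for a test runner's rerun support; the intermittent test is hypothetical and fails on every third call to simulate a race condition deterministically.

```python
def classify(test, runs=20):
    """Run `test` repeatedly and return 'pass', 'fail', or 'flaky'."""
    outcomes = set()
    for _ in range(runs):
        try:
            test()
            outcomes.add(True)
        except AssertionError:
            outcomes.add(False)
    if outcomes == {True}:
        return "pass"
    if outcomes == {False}:
        return "fail"
    return "flaky"  # both passing and failing runs were observed


def stable_test():
    assert 1 + 1 == 2


def make_intermittent_test(fail_every=3):
    """Hypothetical test that fails on every `fail_every`-th call."""
    calls = {"n": 0}

    def test():
        calls["n"] += 1
        assert calls["n"] % fail_every != 0, "simulated race condition"

    return test


if __name__ == "__main__":
    print(classify(stable_test))
    print(classify(make_intermittent_test()))
```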

To improve reliability, two things are essential:

- Test isolation: Try to minimize external dependencies and isolate integration tests to ensure they are self-contained and less susceptible to external factors.

How does that help? Well, dependencies are the required parts that must be ready and integrated into the environments. We can reduce the dependencies by mocking, but we still need to check the integration of the mocked part of the system. Mocks bring their own challenges, as they may not always accurately replicate the behavior of the real dependencies. This can lead to false positives during testing, where the system appears to function correctly with mocks in place, but encounters errors when the real dependencies are introduced.

- Regular maintenance: Continuously update and refactor tests to ensure they remain reliable as the codebase evolves. For that, the tests should be checked every time there is a requirement update or a test failure. However, once the tests have been created, integration testing is isolated from the business logic (most often, it is driven by the QA team). In the best case, integration tests are updated when failures occur in CI. But that’s very late: integration test development lags behind the component code development. This can produce false negative results, which reduces the reliability of the tests.
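The mocking pitfall described under test isolation can be shown in a few lines of Python. In this sketch, the inventory client, its response shape, and the `can_order` function are all hypothetical. The mock encodes an outdated response shape, so the check passes against the mock and then breaks against the real dependency.

```python
from unittest.mock import Mock


class RealInventoryClient:
    """Hypothetical real dependency: reports stock under the key 'quantity'."""

    def get_stock(self, item_id):
        return {"item_id": item_id, "quantity": 5}


def can_order(client, item_id):
    """Code under test, written against an outdated idea of the response."""
    return client.get_stock(item_id)["count"] > 0


# The mock replays the outdated shape, so the check passes.
mock_client = Mock()
mock_client.get_stock.return_value = {"item_id": "A1", "count": 5}
assert can_order(mock_client, "A1") is True

# The real dependency has no 'count' key, so the same code raises KeyError.
try:
    can_order(RealInventoryClient(), "A1")
except KeyError as err:
    print("real dependency broke the code, missing key:", err)
```

The mock and the real client drifted apart without any test noticing, which is exactly the gap that still has to be covered by checking the integration of the mocked part.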

Maintenance

We just discussed how regular test maintenance helps reliability, but maintaining integration tests can be cumbersome, especially in agile environments where the codebase undergoes frequent changes. As the software evolves, integration points may shift, leading to outdated or irrelevant tests. Outdated tests can provide false assurances, leading to potential issues slipping through the cracks.

The following strategies can help reduce maintenance effort:

- Automation: Automate the integration testing process as much as possible to quickly detect issues when new code is introduced. This means we have to automate not only the tests, but also the process. But often, the integration testing process is not an integral part of the development process, which can lead to divergence between development and testing environments.

To bridge this gap, Infrastructure as Code (IaC) tools can play a crucial role by creating dedicated test instances and injecting dummy data into them. Tools like Terraform, Ansible, and CloudFormation can be used to define and provision test environments with realistic dummy data. These tools can automate the creation of test databases, user accounts, and other resources needed for testing, ensuring that the test environment closely mirrors production.

- Version control: Store integration test cases alongside the codebase in version control systems, ensuring that tests are updated alongside the code changes. This can help protect the main branch. But the test suite is often developed independently from services, in which case the version of the code that should be under test (the service code) is not aligned with the testing code. Versioning thus provides benefits for managing the test development, but doesn’t increase overall production quality.
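The idea of a dedicated test instance seeded with dummy data, mentioned under automation, can be shown in miniature. IaC tools express this declaratively in their own configuration languages; the Python sketch below only mimics the principle with an in-memory SQLite database. The `users` schema and the dummy rows are assumptions made for illustration.

```python
import sqlite3

# Hypothetical dummy data for a disposable test instance.
DUMMY_USERS = [
    ("alice", "alice@example.test"),
    ("bob", "bob@example.test"),
]


def provision_test_db():
    """Create a throwaway in-memory database and seed it with dummy users."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE users ("
        "id INTEGER PRIMARY KEY, name TEXT NOT NULL, email TEXT NOT NULL)"
    )
    conn.executemany("INSERT INTO users (name, email) VALUES (?, ?)", DUMMY_USERS)
    conn.commit()
    return conn


if __name__ == "__main__":
    conn = provision_test_db()
    print(conn.execute("SELECT name FROM users ORDER BY id").fetchall())
    conn.close()
```

Because every run starts from the same seeded state, a failing test points at the code change rather than at leftover data from an earlier run.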

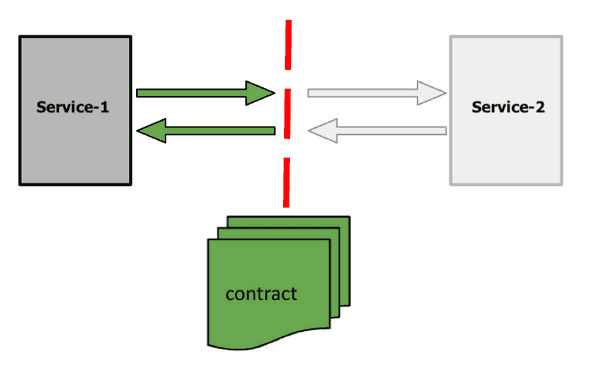

Breaking it up: contract testing

Despite its importance, integration testing can be time-consuming and resource-intensive, particularly in large, complex systems. For these reasons, it is worth considering contract testing as a complementary approach to integration testing, especially for integration-in-the-small. Contract testing addresses the challenges of integration testing by allowing teams to define and verify the contracts, or agreements, between services, ensuring that each component behaves as expected. By focusing on the interactions between services rather than the entire system, contract testing enables faster and more reliable testing, reducing the complexity and maintenance burden associated with traditional integration testing.

As an example, let’s assume that there is an interaction between two services. Service-1 is expecting a response while Service-2 is expecting to be called correctly. This relation is defined in a document called a contract. The contract is a living document and can be checked in isolation with a mock server. Whenever there are requirement updates for Service-2, the contract will be updated and Service-1 will get the required version of the contract. Service-1 then verifies, against the mock server, that it can handle the response defined in the contract.
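A minimal Python sketch of this check, under stated assumptions: the route `/users/42`, the response body, and all function names are hypothetical, and the mock server is reduced to an in-process function that replays the contract instead of a real HTTP server. Dedicated contract testing tools handle this far more thoroughly; the sketch only shows the two sides of the verification.

```python
# Hypothetical contract between Service-1 (consumer) and Service-2 (provider).
CONTRACT = {
    "consumer": {"name": "Service-1"},
    "provider": {"name": "Service-2"},
    "interactions": [
        {
            "request": {"method": "GET", "path": "/users/42"},
            "response": {"status": 200, "body": {"id": 42, "name": "Ada"}},
        }
    ],
    "metadata": {"version": "1.0.0"},
}


def stub_from_contract(contract):
    """Build an in-process stand-in for the provider that replays the contract."""
    table = {
        (i["request"]["method"], i["request"]["path"]): i["response"]
        for i in contract["interactions"]
    }

    def send(method, path):
        return table.get((method, path), {"status": 404, "body": None})

    return send


def fetch_user_name(send, user_id):
    """Consumer-side code in Service-1 that relies on the provider's response."""
    response = send("GET", f"/users/{user_id}")
    if response["status"] != 200:
        raise LookupError(f"user {user_id} not found")
    return response["body"]["name"]


def service2_handler(method, path):
    """Hypothetical Service-2 implementation."""
    if method == "GET" and path == "/users/42":
        return {"status": 200, "body": {"id": 42, "name": "Ada"}}
    return {"status": 404, "body": None}


def provider_honours(contract, handler):
    """Provider-side check: replay each contract request against the handler."""
    return all(
        handler(i["request"]["method"], i["request"]["path"]) == i["response"]
        for i in contract["interactions"]
    )


if __name__ == "__main__":
    print(fetch_user_name(stub_from_contract(CONTRACT), 42))
    print(provider_honours(CONTRACT, service2_handler))
```

Each side is verified against the contract alone, so neither service has to be deployed for the other's check to run.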

A simple contract between Service-1 and Service-2 looks roughly like this:

```
{
  "consumer": {
    "name": "Service-1"
  },
  "provider": {
    "name": "Service-2"
  },
  "interactions": [
    {
      "request": {
        < description of HTTP request: method, route, headers, body >
      },
      "response": {
        < description of HTTP response: status code, headers, body >
      }
    }
  ],
  "metadata": {
    < contract version >
  }
}
```

Conclusion

While integration testing is crucial for identifying issues that arise when different components interact, it is essential to be aware of and address the challenges related to speed, reliability, and maintenance. By employing the right strategies and tools, development teams can mitigate these challenges and ensure that integration testing remains effective, efficient, and reliable throughout the software development process.

While contract testing can significantly improve the testing process, it is essential to note that integration testing is still necessary for large, complex systems where multiple components interact in complex ways. In these cases, integration testing provides a critical layer of validation, ensuring that the entire system functions as intended. By combining contract testing with integration testing, development teams can ensure that their systems are thoroughly tested, reliable, and efficient.

Behind the scenes

Mesut began his career as a software developer. He became interested in test automation and DevOps from 2010 onwards, with the goal of automating the development process. He's passionate about open-source tools and has spent many years providing test automation consultancy services and speaking at events. He has a bachelor's degree in Electrical Engineering and a master's degree in Quantitative Science.
