Retrieval-augmented generation (RAG) is about providing large language models with extra context to help them produce more informative responses. Like any machine learning application, your RAG app needs to be monitored and evaluated to ensure that it continues to respond accurately to user queries. Fortunately, the RAG ecosystem has developed to the point where you can evaluate your system in just a handful of lines of code. The outputs of these evaluations are easily interpretable: numbers between 0 and 1, where higher numbers are better. Just copy our sample code below, paste it into your continuous monitoring system, and you’ll be looking at nice dashboards in no time. So that’s it, right?

Well, not quite. There are several common pitfalls in RAG evaluation. In this blog post, we draw on what we’ve learned in the field to explain what the metrics mean and how to check that they’re working correctly on your data. As they say, “forewarned is forearmed”!

Background

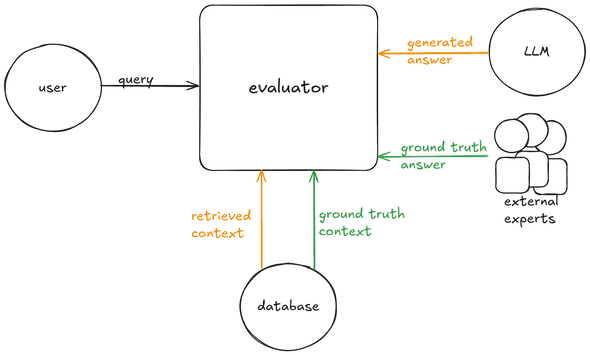

If you’re new to RAG evaluation, our previous posts about it give an introduction to evaluation and discuss benchmark suites. For now, you just need to know that a benchmark suite consists of a collection of questions or prompts, and for each question establishes:

- a “ground truth” context, consisting of documents from our database that are relevant for answering the question; and

- a “ground truth” answer to the question.

For example:

| Query | Ground truth context | Ground truth answer |
|---|---|---|
| What is the capital of France? | Paris, the capital of France, is known for its delicious croissants. | Paris |
| Where are the best croissants? | Lune Croissanterie, in Melbourne, Australia, has been touted as ‘the best croissant in the world.’ | Melbourne |

Then the RAG system provides (for each question):

- a “retrieved” context — the documents that our RAG system thought were relevant — and

- a generated answer.
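To make the pieces concrete, here is a minimal sketch of how one benchmark entry and the corresponding RAG output might be represented in Python. The class and field names are our own illustrative choices, not part of any library.

```python
from dataclasses import dataclass


@dataclass
class BenchmarkEntry:
    """One row of a benchmark suite (field names are illustrative)."""
    query: str
    ground_truth_contexts: list[str]
    ground_truth_answer: str


@dataclass
class RagOutput:
    """What the RAG system produced for one benchmark query."""
    retrieved_contexts: list[str]
    generated_answer: str


entry = BenchmarkEntry(
    query="What is the capital of France?",
    ground_truth_contexts=[
        "Paris, the capital of France, is known for its delicious croissants."
    ],
    ground_truth_answer="Paris",
)
output = RagOutput(
    retrieved_contexts=["Berlin is the capital of Germany."],
    generated_answer="I don't know.",
)
```

Different metrics consume different subsets of these five fields, which is why it helps to keep them clearly separated.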

Example

Here’s an example that uses the Ragas library to evaluate the “faithfulness” (how well the response was supported by the context) of a single RAG output, using an LLM from AWS Bedrock:

```python
from langchain_aws import ChatBedrockConverse
from ragas import EvaluationDataset, evaluate
from ragas.llms import LangchainLLMWrapper
from ragas.metrics import Faithfulness

# In real life, this probably gets loaded from an internal file (and hopefully
# has more than one element!)
eval_dataset = EvaluationDataset.from_list([{
    "user_input": "What is the capital of France?",
    "retrieved_contexts": ["Berlin is the capital of Germany."],
    "response": "I don't know.",
}])

# The LLM to use for computing metrics (more on this below).
model = "anthropic.claude-3-haiku-20240307-v1:0"
evaluator = LangchainLLMWrapper(ChatBedrockConverse(model=model))

print(evaluate(dataset=eval_dataset, metrics=[Faithfulness(llm=evaluator)]))
```

If you paid close attention in the previous section, you’ll have noticed that our evaluation dataset doesn’t include all of the components we talked about. That’s because the “faithfulness” metric only requires the retrieved context and the generated answer.

RAG evaluation metrics

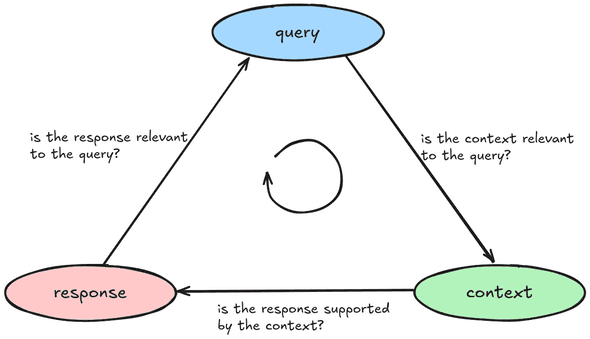

There are a variety of RAG evaluation metrics available; to keep them straight, we like to use the RAG Triad, a helpful system for categorizing some RAG metrics. A RAG system has one input (the query) and two outputs (the context and the response), and the RAG Triad lets us visualize the three interactions that need to be evaluated.

Evaluating retrieval

Feeding an LLM with accurate and relevant context can help it respond well; that’s the whole idea of RAG. Your system needs to find that relevant context, and your evaluation system needs to figure out how well the retrieval is working. This is the top-right side of the RAG Triad: evaluating the relationship between the query and the retrieved context. The two main retrieval metrics are precision and recall; each one has a classical definition, plus an “LLM-enhanced” definition for RAG. Roughly, “good precision” means that we don’t return irrelevant information, while “good recall” means that we don’t miss any relevant information. Let’s say that each of our benchmark queries is labelled with a ground truth set of relevant documents, so that we can check how many of the retrieved documents are relevant.

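Then the classical precision and recall are

$$
\text{precision} = \frac{\text{number of relevant retrieved documents}}{\text{number of retrieved documents}},
\qquad
\text{recall} = \frac{\text{number of relevant retrieved documents}}{\text{number of relevant documents in the database}}.
$$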

These metrics are well-established, useful, and easy to compute. But in a RAG system, the database might be large, uncurated, and contain redundant documents. For example, suppose you have ten related documents, each containing an answer to the query. If your retrieval system returns just one of them then it will have done its job adequately, but it will only receive a 10% recall score. With a large database, it’s also possible that there’s a document with the necessary context that wasn’t tagged as relevant by the benchmark builder. If the retrieval system finds that document, it will be penalized in the precision score even though the document is relevant.
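The redundancy problem is easy to reproduce. The sketch below computes classical precision and recall over sets of document IDs (the IDs are made up for illustration) for the scenario just described: ten relevant documents, of which the retriever returns one.

```python
def precision_recall(retrieved: set, relevant: set) -> tuple:
    """Classical document-level precision and recall.

    Returns None for a metric whose denominator would be zero.
    """
    hits = len(retrieved & relevant)
    precision = hits / len(retrieved) if retrieved else None
    recall = hits / len(relevant) if relevant else None
    return precision, recall


# Ten redundant documents are all labelled relevant (hypothetical IDs)...
relevant_docs = {f"doc-{i}" for i in range(10)}
# ...but the retriever returns just one of them, which is enough to answer.
retrieved_docs = {"doc-3"}

precision, recall = precision_recall(retrieved_docs, relevant_docs)
print(precision, recall)  # 1.0 0.1
```

The retriever did everything we wanted, yet the recall score suggests it missed 90% of what it should have found.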

Because of these issues with classical precision and recall, RAG evaluations often adapt them to work on statements instead of documents. We list the statements in the ground-truth context and in the retrieved context; we call a retrieved statement “relevant” if it was present in the ground-truth context.

This definition of precision and recall is better tailored to RAG than the classical one, but it comes with a big disadvantage: you need to decide what a “statement” is, and whether two statements are “the same.” Usually you’ll want to automate this decision with an LLM, but that raises its own issues with cost and reliability. We’ll say more about that later.
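Here is a rough sketch of statement-based precision and recall with a pluggable "are these the same statement?" check. The `same_statement` function below is a deliberately naive stand-in (case-insensitive string comparison) for the LLM judgment you would use in practice, and all names are our own. Note one design choice: recall counts the ground-truth statements that were found, so repeated statements in the retrieved context can't push it above 1.

```python
def normalize(statement: str) -> str:
    """Lower-case, trim surrounding spaces and periods, collapse whitespace."""
    return " ".join(statement.lower().strip(" .").split())


def same_statement(a: str, b: str) -> bool:
    """Naive placeholder; a real pipeline would ask an LLM instead."""
    return normalize(a) == normalize(b)


def statement_precision_recall(retrieved, ground_truth, is_same=same_statement):
    """Precision and recall over statements rather than documents."""
    relevant_retrieved = [
        s for s in retrieved if any(is_same(s, g) for g in ground_truth)
    ]
    found_truth = [
        g for g in ground_truth if any(is_same(g, s) for s in retrieved)
    ]
    precision = len(relevant_retrieved) / len(retrieved) if retrieved else None
    recall = len(found_truth) / len(ground_truth) if ground_truth else None
    return precision, recall


ground_truth = [
    "Paris is the capital of France.",
    "Paris is known for its croissants.",
]
retrieved = [
    "paris is the capital of france",
    "Berlin is the capital of Germany.",
]
print(statement_precision_recall(retrieved, ground_truth))  # (0.5, 0.5)
```

Swapping `is_same` for an LLM call is where the cost and reliability questions come in: every pair of statements you compare is another opportunity for the judge to be wrong.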

Evaluating generation

Once your retrieval is working well (with continuous monitoring and evaluation, of course), you’ll need to evaluate your generation step. The most commonly used metric here is faithfulness<sup>1</sup>, which measures whether a generated answer is factually supported by the retrieved context; this is the bottom side of the RAG Triad. To calculate faithfulness, we count the number of factual claims in the generated answer, and then decide which of them are supported by the context. Then we define

$$
\text{faithfulness} = \frac{\text{number of claims supported by the context}}{\text{number of factual claims in the generated answer}}.
$$

Like the RAG-adapted versions of context precision and recall, this is a statement-based metric. To automate it, we’d need an LLM to count the factual claims and decide which of them is context-supported.
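As a minimal sketch, the ratio itself is only a few lines once the claims have been extracted and judged. In the example below, the `verdicts` dictionary is a hand-written stand-in for the LLM's support judgments, and returning `None` when there are no claims is our own assumption about how to handle that case.

```python
from typing import Callable, Optional


def faithfulness(claims: list[str],
                 is_supported: Callable[[str], bool]) -> Optional[float]:
    """Fraction of claims supported by the context.

    Returns None when the answer contains no factual claims, since the
    ratio would otherwise be zero divided by zero.
    """
    if not claims:
        return None
    supported = sum(1 for claim in claims if is_supported(claim))
    return supported / len(claims)


# Hand-labelled verdicts standing in for an LLM judge (illustrative only).
# Context: "Paris, the capital of France, is known for its delicious croissants."
verdicts = {
    "Paris is the capital of France.": True,
    "Paris has two million inhabitants.": False,
}

print(faithfulness(list(verdicts), verdicts.get))  # 0.5
print(faithfulness([], verdicts.get))              # None
```

Both inputs to this function (the list of claims and the support verdicts) come from an LLM in an automated pipeline, so the arithmetic is the only part you can fully trust.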

You can evaluate faithfulness without having retrieval working yet, as long as you have a benchmark with ground truth contexts. But if you do that, there’s one crucial point to keep in mind: you also need to test generation when retrieval is bad, like when it contains distracting irrelevant documents or just doesn’t have anything useful at all. Bad retrieval will definitely happen in the wild, and so you need to ensure that your generation (and your generation evaluation) will degrade gracefully. More on that below.

Evaluating the answer

Finally, there is a family of commonly-used generation metrics that evaluate the quality of the answer by comparing it to the prompt and the ground truth:

- answer semantic similarity measures the semantic similarity between the generated answer and the ground truth;

- answer correctness also compares the generated answer and the ground truth, but is based on counting factual claims instead of semantic similarity; and

- answer relevance measures how well the generated answer corresponds to the question that the prompt asked. This is the top-left side of the RAG Triad.

These metrics directly get to the key outcome of your RAG system: are the generated responses good? They come with the usual pluses and minuses of end-to-end metrics. On the one hand, they measure exactly what you care about; on the other hand, when they fail you don’t know which component is to blame.
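One common way to compute semantic similarity is to embed both texts and take the cosine of the angle between the embedding vectors. The sketch below shows only that last step; the three-dimensional vectors are toy values we made up, whereas real embeddings would come from an embedding model and have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_u * norm_v)


# Toy "embeddings" for a generated answer and a ground truth answer.
generated_embedding = [0.2, 0.9, 0.1]
ground_truth_embedding = [0.25, 0.85, 0.05]

similarity = cosine_similarity(generated_embedding, ground_truth_embedding)
print(f"answer semantic similarity: {similarity:.3f}")
```

Unlike the claim-counting metrics, this needs no LLM judge, but the score then depends on the choice of embedding model instead.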

As you’ve seen above, many of the metrics used for evaluating RAG rely on LLMs to extract and evaluate factual claims. That means that some of the same challenges you’ll face while building your RAG system also apply to its evaluation:

- You’ll need to decide which model (or models) to use for evaluation, taking into account cost, accuracy, and reliability.

- You’ll need to sanity-check the evaluator’s responses, preferably with continuous monitoring and occasional manual checks.

- Because the field is moving so quickly, you’ll need to evaluate the options yourself — any benchmarks you read online have a good chance of being obsolete by the time you read them.

When the judges don’t agree

In order to better understand these issues, we ran a few experiments on a basic RAG system — without query re-writing, context re-ranking or other tools to improve retrieval — using the Neural Bridge benchmark dataset as our test set. We first ran these experiments in early 2024; when we re-visited them in December 2024 we found that newer base LLMs had improved results somewhat but not dramatically.

The Neural Bridge dataset contains 12,000 questions; each one comes with a context and an answer. We selected 200 of these questions at random and ran them through a basic RAG system using Chroma DB as the vector store. As the LLM, we used Llama 2 for the early 2024 runs and Claude 3 Haiku for the December 2024 runs. The RAG system was not highly tuned (for example, its retrieval step was just a vector similarity search), and so it gave a mix of good answers, bad answers, and answers saying essentially “I don’t know: the context doesn’t say.” Finally, we used Ragas to evaluate various metrics on the generated responses, while varying the LLMs used to power the metrics.

Experimental results

Our goal in these experiments was to determine:

- whether the LLM evaluators were correct, and

- whether they were consistent with one another.

We found that different LLMs are often not in agreement. In particular, they can’t all be correct.

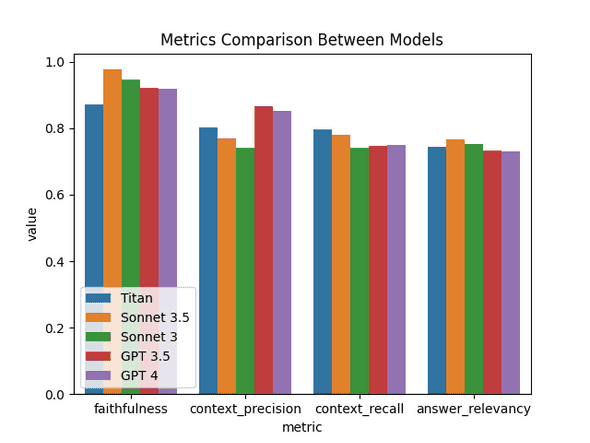

Here are the evaluation scores of five different models on four different metrics, averaged across our benchmark dataset. You’ll notice a fair amount of spread in the scores for faithfulness and context precision.

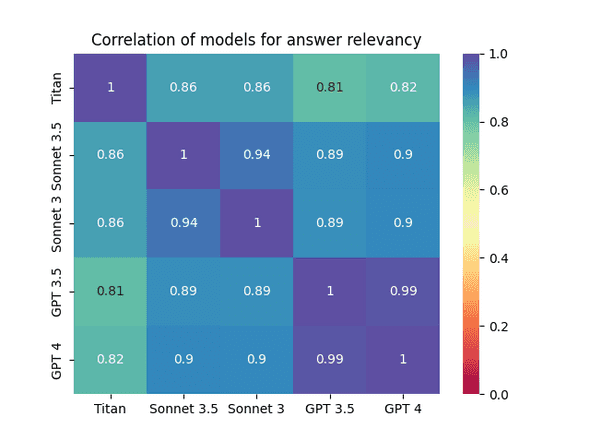

But the scores above are just averages across the dataset — they don’t tell us how well the LLMs agreed on individual ratings. For that, we checked the correlation between model scores and again found some discrepancies between models. Here are the results for answer relevancy scores: the correlations show that even though the different models gave very similar average scores, they aren’t in full agreement.

It might not be too surprising that models from the same family (GPT 3.5 and 4, and Sonnet 3 and 3.5) had larger overlaps than models from different families. If your budget allows it, choosing multiple uncorrelated models and evaluating with all of them might make your evaluation more robust.
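If you want to run the same kind of check on your own evaluators, a pairwise Pearson correlation over per-question scores is a reasonable starting point. The sketch below uses made-up scores from three hypothetical evaluators; these are not our experimental results.

```python
from itertools import combinations
from math import sqrt
from statistics import mean


def pearson(xs, ys):
    """Pearson correlation of two equal-length score lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / sqrt(var_x * var_y)


# Made-up per-question scores from three hypothetical evaluators.
scores_by_judge = {
    "judge_a": [0.9, 0.8, 0.2, 0.7],
    "judge_b": [0.8, 0.9, 0.3, 0.6],
    "judge_c": [0.7, 0.7, 0.9, 0.6],
}

for left, right in combinations(scores_by_judge, 2):
    r = pearson(scores_by_judge[left], scores_by_judge[right])
    print(f"{left} vs {right}: r = {r:.2f}")
```

Evaluators with similar averages can still correlate poorly question by question, which is exactly what a dashboard of dataset-level means will hide.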

When faithfulness gets difficult

We dug a little more into the specific reasons for LLM disagreement, and found something interesting about the faithfulness score when we restricted to the subset of questions for which retrieval was particularly bad, having no overlap with the ground truth data. Even the definition of faithfulness is tricky when the context is bad. Let’s say the LLM decides that the context doesn’t have relevant information and so responds “I don’t know” or “The context doesn’t say.” Are those factual statements? If so, are they supported by the context? If not, then according to the definition, the faithfulness is zero divided by zero. Alternatively, you could try to detect responses like this and treat them as a sort of meta-response that doesn’t go through the normal metrics pipeline. We’re not sure how best to handle this corner case, but we do know that you need to handle it explicitly and consistently. You also need to be prepared to handle null values and empty responses from your metrics pipeline, because this situation often induces them.
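As one possible way to make this handling explicit, the sketch below flags "I don't know"-style responses with a simple pattern and aggregates scores while reporting how many were missing. The pattern and the function names are our own assumptions; a production system would need a more robust detector, possibly an LLM classifier.

```python
import re
from statistics import mean

# A deliberately small pattern; extend it for your own system's phrasing.
ABSTENTION_PATTERN = re.compile(
    r"\b(i don'?t know|the context (doesn'?t|does not) say)\b",
    re.IGNORECASE,
)


def is_abstention(response: str) -> bool:
    """True if the response looks like a refusal to answer from the context."""
    # Treat typographic apostrophes like plain ones before matching.
    return bool(ABSTENTION_PATTERN.search(response.replace("\u2019", "'")))


def summarize(scores: list) -> dict:
    """Average the valid scores and report how many were missing (None)."""
    valid = [s for s in scores if s is not None]
    return {
        "mean": mean(valid) if valid else None,
        "n_scored": len(valid),
        "n_missing": len(scores) - len(valid),
    }


print(is_abstention("I don't know: the context doesn't say."))  # True
print(is_abstention("Paris"))                                   # False
print(summarize([1.0, None, 0.5]))
```

Reporting the number of missing scores next to the mean keeps silent failures visible: an average computed over half of your examples means something quite different from one computed over all of them.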

Experimental results

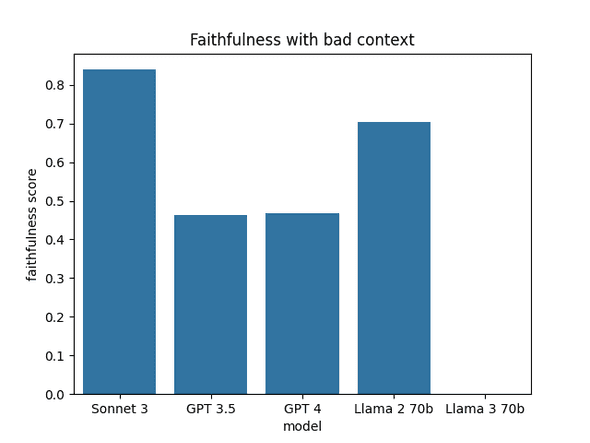

On the subset of questions with poor retrieval, our Ragas-computed faithfulness scores ranged from 0%, as judged by Llama 3, to more than 80%, as judged by Claude 3 Sonnet. We emphasize that these were faithfulness scores evaluated by different LLMs judging the same questions, retrieved contexts, and generated answers. Even if you exclude Llama 3 as an outlier, there is a lot of variation.

This variation in scores doesn’t seem to be an intentional choice (to the extent that LLMs can have “intent”) by the evaluator LLMs, but rather a situation of corner cases compounding one another. We noticed that this confusing situation made some models — Llama 3 most often, but also other models — fail to respond in the JSON format expected by the Ragas library. Depending on how you treat these failures, this can result in missing metrics or strange scores. You can sidestep these issues somewhat if you have thorough evaluation across the entire RAG pipeline: if other metrics are flagging poor retrieval, it matters less that your generation metrics are behaving strangely on poorly-retrieved examples.
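To illustrate what "treating these failures" can look like, here is a sketch of a tolerant parser for evaluator replies. The JSON schema (a list of objects with a `verdict` of 0 or 1) is a hypothetical one chosen for this example, not the format Ragas uses internally.

```python
import json
from typing import Optional


def parse_verdicts(raw: str) -> Optional[list]:
    """Return the list of 0/1 verdicts, or None if the reply is malformed."""
    try:
        parsed = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(parsed, list):
        return None
    verdicts = []
    for item in parsed:
        if not isinstance(item, dict):
            return None
        verdict = item.get("verdict")
        # Reject booleans explicitly, since True == 1 in Python.
        if isinstance(verdict, bool) or verdict not in (0, 1):
            return None
        verdicts.append(verdict)
    return verdicts


raw_replies = [
    '[{"statement": "Paris is in France.", "verdict": 1}]',
    "Sure! Here is my assessment of the statements...",
]
parsed = [parse_verdicts(reply) for reply in raw_replies]
n_failed = sum(p is None for p in parsed)
print(f"{n_failed} of {len(raw_replies)} evaluator replies could not be parsed")
```

Whatever you decide to do with unparseable replies (retry, drop, or score as zero), counting them per evaluator model makes it clear when a strange score is really a formatting failure.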

In general, there’s no good substitute for careful human evaluation. The LLM judges don’t agree, so which one agrees best with ground truth human evaluations (and is the agreement good enough for your application)? That will depend on your documents, your typical questions, and on future releases of improved models.
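Once you have a set of human-labelled examples, comparing judges against them can be as simple as the sketch below, which computes the fraction of examples where each judge's binary verdict matches the human label. The labels and judge names are invented for illustration; a chance-corrected statistic such as Cohen's kappa may be a better fit if your labels are imbalanced.

```python
def agreement_rate(judge_verdicts, human_verdicts):
    """Fraction of examples where the judge matches the human label."""
    if len(judge_verdicts) != len(human_verdicts) or not human_verdicts:
        raise ValueError("need two non-empty lists of equal length")
    matches = sum(j == h for j, h in zip(judge_verdicts, human_verdicts))
    return matches / len(human_verdicts)


# Invented labels: 1 = "claim is supported", 0 = "claim is not supported".
human = [1, 0, 1, 1, 0]
judges = {
    "judge_a": [1, 0, 1, 0, 0],
    "judge_b": [1, 1, 0, 1, 1],
}

rates = {name: agreement_rate(v, human) for name, v in judges.items()}
best = max(rates, key=rates.get)
print(rates, "-> best agreement:", best)
```

Whether the best judge's agreement is good enough is still a decision for your application, not something the number can settle on its own.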

Conclusion

Oh, were you hoping we’d tell you which LLM you should use? No such luck: our advice would be out of date by the time you read this, and if your data doesn’t closely resemble our benchmark data, then our results might not apply anyway.

In summary, it’s easy to compute metrics for your RAG application, but don’t just do it blindly. You’ll want to test different LLMs for driving the metrics, and you’ll need to evaluate their outputs. Your metrics should cover all the sides of the RAG triad, and you should know what they mean (and be aware of their corner cases) so that you can interpret the results. We hope that helps, and happy measuring!

1. The terminology is not quite settled: what Ragas calls “faithfulness,” TruLens calls “groundedness.” Since the RAG Triad was introduced by TruLens, you’ll usually see it used in conjunction with their terminology. We’ll use the Ragas terminology in this post, since that’s what we used for our experiments.

Behind the scenes

Joe is a programmer and a mathematician. He loves getting lost in new topics and is a fervent believer in clear documentation.

Nour is a data scientist/engineer who recently made the leap of faith from Academia to Industry. She has worked on Machine Learning, Data Science and Data Engineering problems in various domains. She has a PhD in Computer Science and currently lives in Paris, where she stubbornly tries to get her native Lebanese plants to live on her tiny Parisian balcony.

Maria, a mathematician turned Senior Data Engineer, excels at blending her analytical prowess and software development skills within the tech industry. Her role at Tweag is twofold: she is not only a key contributor to the innovative AI projects but also heavily involved in data engineering aspects, such as building robust data pipelines and ensuring data integrity. This skill set was honed through her transition from academic research in numerical modelling of turbulence to the realm of software development and data science.
