As software engineers at Modus Create, we are always on the lookout for tools that can enhance our productivity and code quality. The advent of AI-powered coding assistants such as GitHub Copilot has sparked excitement in the development community. Copilot code completions propose snippets at the current cursor position that the user can quickly insert, while Copilot Chat allows users to discuss their code with an AI.

These tools promise to revolutionize software development, allowing engineers to focus on higher-level tasks while delegating implementation details to machines. However, their adoption also raises questions:

- Do they genuinely improve developer productivity?

- How do they affect code quality and maintainability?

- Which users and tasks benefit the most from these AI-driven coding assistants?

This blog post explores the challenges of measuring the impact of AI tools in our software engineering practices, with a focus on GitHub Copilot. Note that the data discussed in the post was collected in Q2 2024. We expect that GitHub Copilot has improved since then; we have also not yet had the opportunity to quantitatively investigate newer interfaces to AI development, like Cursor or Windsurf.

“Developer Productivity”

At Modus Create, we’re passionate about improving the experience of developers, both for our own teams and those at clients. We have been working for years on tools that we think improve developer productivity, for instance with Nix, Bazel, Python, and many more. But measuring developer productivity is a notoriously difficult task.

At the heart of this question lies the nature of software development itself. Is it a productive activity that can fit scientific management, be objectively measured, and be optimized? Part of the research on developer productivity goes down this path, trying to measure things like the time it takes to complete standardized tasks. Another trend suggests that developers themselves can be their own assessors of productivity, where frameworks like SPACE are used to guide self-assessment. Each of these angles has strengths and weaknesses. To get as broad a picture as possible, we tried to use a bit of both. We found, though, that data collection issues made our task timings unusable (more on this below). Therefore, all our conclusions are drawn from self-assessments.

Our in-house experiment

To gain a deeper understanding of the impact of GitHub Copilot at Modus Create, we designed and conducted an in-house experiment.

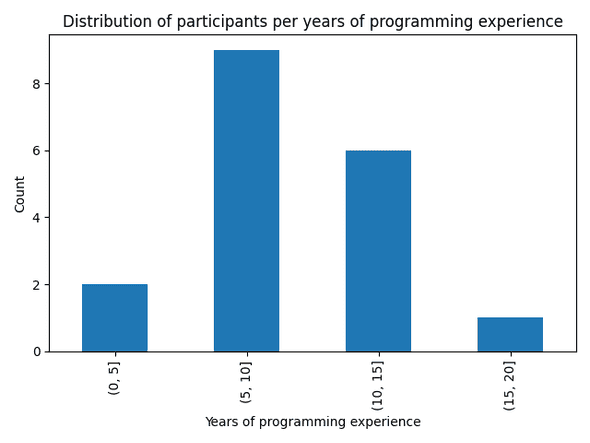

We managed to recruit 22 participants in total, ranging from Junior to Principal software engineers. They had a wide range of programming experience.

The experiment consisted of four coding tasks that participants needed to complete using Python within an existing codebase. The tasks were designed to evaluate different aspects of software development:

- Data ingestion: Loading and parsing data from a file into a Pandas `DataFrame`

- Data analysis: Performing statistical computations and aggregations using Pandas’ `groupby` operations

- Test development: Writing tests using Python’s `unittest` framework

- Data visualization: Creating interactive plots using the Streamlit library

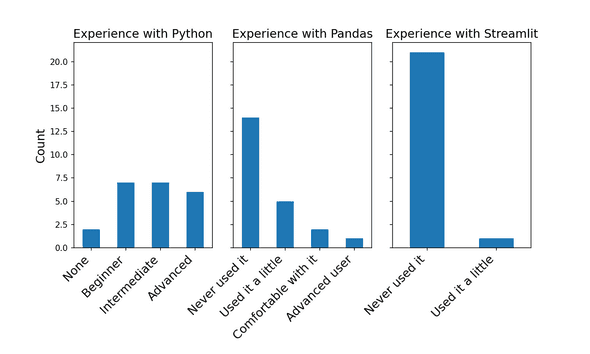

Participants had varied levels of experience with the required tools. Most participants had at least a little bit of Python experience, but Pandas experience was less common and hardly anyone had used Streamlit before.[1]

Upon completion of the assigned tasks, all participants completed a comprehensive survey to provide detailed feedback on their experience. The survey consisted of approximately 50 questions designed to assess multiple dimensions of the development process, including:

- Assessment of participant expertise levels regarding task requirements, AI tooling and GitHub Copilot proficiency

- Evaluation of task-specific perceived productivity

- Analysis of the impact on learning and knowledge acquisition

- Insights into potential future GitHub Copilot adoption

Perceived productivity gains

We asked participants the following questions.

| Question | Choices |
|---|---|
| If you didn't have Copilot, reaching the answer for task X would have taken... | |

This question was core to our study, as it allowed us to directly measure the perceived productivity gain of using Copilot versus not using it.

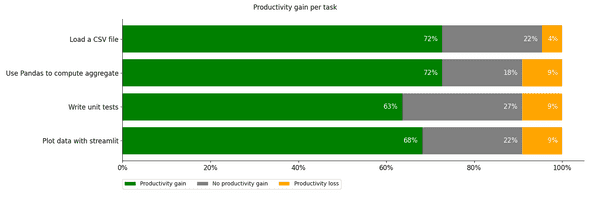

The result was clear: almost every Copilot user felt more productive using Copilot on every task.

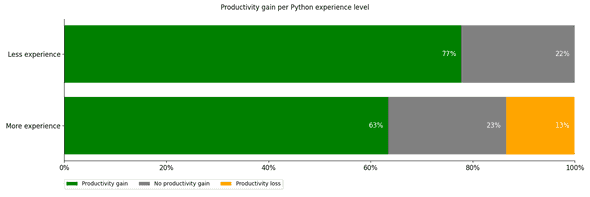

We also broke out the same data by Python experience level, and found that more experienced Python users reported smaller productivity gains than less experienced users. In this plot, we grouped the “no Python experience” and “beginner” users into the “less experienced” group, with the rest of the users in the “more experienced” group.
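The grouping described above can be sketched in a few lines of Python. This is a minimal illustration rather than our actual analysis code: the level labels other than “no Python experience” and “beginner”, the shape of the response records, the function names, and the sample data are all hypothetical.

```python
from collections import defaultdict

# Levels folded into the "less experienced" group, as described above.
LESS_EXPERIENCED = {"no Python experience", "beginner"}


def experience_group(python_level: str) -> str:
    """Map a self-reported Python level to one of the two plot groups."""
    if python_level in LESS_EXPERIENCED:
        return "less experienced"
    return "more experienced"


def share_feeling_faster(responses):
    """Return, per group, the fraction of responses reporting a gain.

    `responses` is an iterable of (python_level, felt_more_productive)
    pairs; this record shape is an assumption made for the example.
    """
    totals = defaultdict(int)
    gains = defaultdict(int)
    for level, felt_more_productive in responses:
        group = experience_group(level)
        totals[group] += 1
        gains[group] += int(felt_more_productive)
    return {group: gains[group] / totals[group] for group in totals}


# Made-up sample data, for illustration only (not our survey results).
sample = [
    ("beginner", True),
    ("no Python experience", True),
    ("intermediate", True),
    ("advanced", False),
]
print(share_feeling_faster(sample))
```

Keeping the group mapping in one small function makes it easy to try a different split (for example, three groups instead of two) without touching the aggregation.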

To better understand how participants tackled these tasks, we collected information by asking for each task:

| Question | Choices |
|---|---|
| Which of the following have you used to complete task X? | |

We were also interested in comparing these usages across profiles of developers, so we asked this question as well:

| Question | Choices |
|---|---|
| How would you describe your Python level? | |

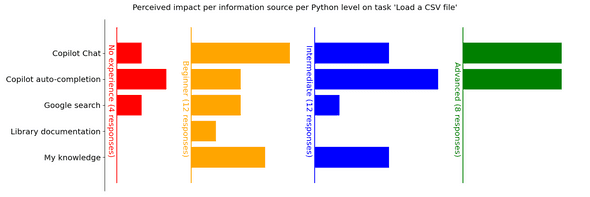

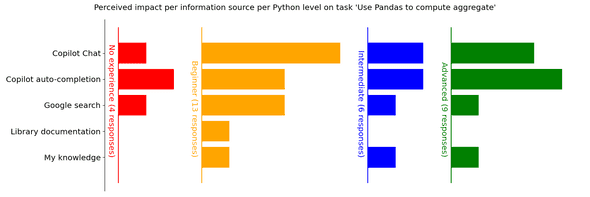

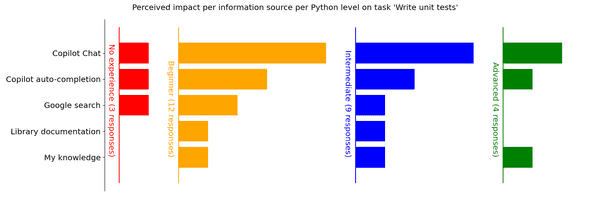

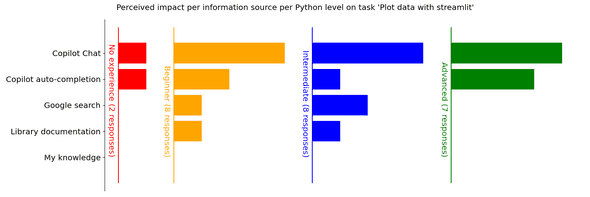

We could then visualize how participants who felt more productive with Copilot solved each problem, and see if there were variations depending on their profile. Since each participant could choose multiple options, sometimes there are more responses than participants.
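To make the "more responses than participants" point concrete, here is a small sketch of how multi-select answers can be tallied. The participant ids, option names, and answers are placeholders invented for the example, not our survey data.

```python
from collections import Counter


def count_sources(answers):
    """Count how often each source was ticked across all participants.

    `answers` maps a participant id to the set of sources they selected
    for one task. Because the question allowed multiple selections, the
    counts can add up to more than the number of participants.
    """
    counts = Counter()
    for selected in answers.values():
        counts.update(selected)
    return counts


# Made-up answers for a single task; the option names are placeholders.
answers = {
    "p1": {"Copilot completions", "Copilot Chat"},
    "p2": {"Copilot Chat"},
    "p3": {"Copilot completions", "Library documentation", "Copilot Chat"},
}
counts = count_sources(answers)
total_responses = sum(counts.values())
print(f"{len(answers)} participants, {total_responses} responses")
```

In this toy example three participants produce six responses, which is why the bars in a plot of this kind can sum to more than the group size.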

Apparently, people don’t like library documentation. Also, we thought it was strange that the most experienced Python users never reported using their own knowledge. It would be interesting to dig more into this, but we don’t have the data available. One theory is that when reviewing AI suggestions everyone relied on their own Python knowledge, but experienced users took that knowledge for granted and so didn’t report using it.

Among people who felt more productive on the “Write unit tests” and “Plot with Streamlit” tasks, we see noticeably more usage of Copilot Chat than of other sources.

Our hypothesis is that these tasks typically require making more global changes to the code or adding code in places that are not obvious at first. In these scenarios, Copilot Chat is more useful because it will tell you where and what code to add. In other tasks, it was clearer where to add code, so participants could likely place their cursor and prompt Copilot for a suggestion.

This is supported by another question we asked:

| Question | Choices |
|---|---|
| Which of the following do you think is true? | |

This question used checkboxes, so respondents were not restricted to a single answer.

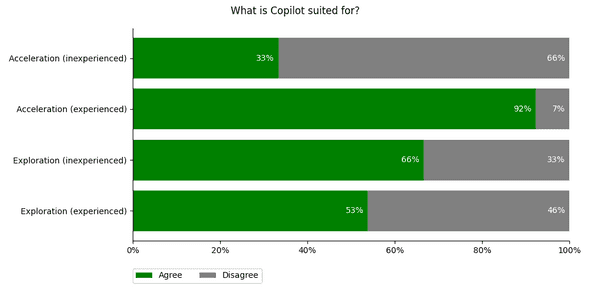

On average, participants thought Copilot was suited for both acceleration and exploration, but with some notable differences depending on experience level: experienced Pythonistas strongly favored Copilot for acceleration, while less experienced users thought it was better for exploration.

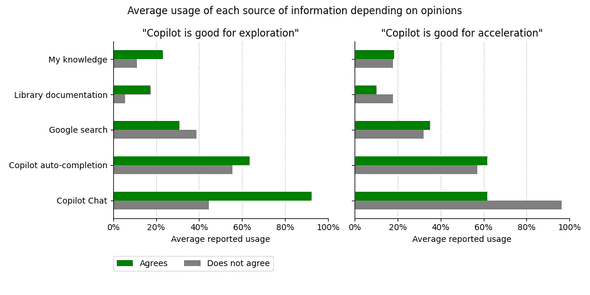

We also found that the participants’ perspective on acceleration versus exploration seems related to the usage of Copilot Chat.

The most interesting part of this chart is that participants who think Copilot is good for exploration or bad for acceleration relied most heavily on Copilot Chat. This suggests that users find the autocomplete features more useful for acceleration, while the chat features (which allow general questions, divorced from a specific code location) are useful for exploration. More broadly, how much a participant used Copilot Chat versus autocomplete is correlated with how they perceive Copilot as a whole.

For more on acceleration versus exploration with Copilot, this OOPSLA23 talk, which inspired us to ask this question, is worth watching.

Copilot will make code flow

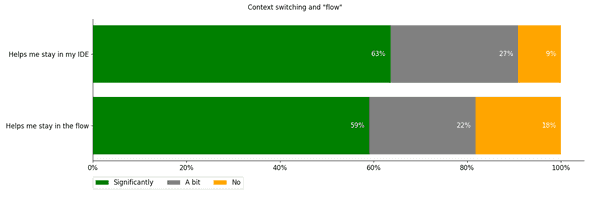

The SPACE framework mentions “flow” as an important aspect of productivity.

Some research associates productivity with the ability to get complex tasks done with minimal distractions or interruptions. This conceptualization of productivity is echoed by many developers when they talk about “getting into the flow” […].

This concept of flow is really interesting, because it is a way to measure productivity that is not based on outputs, but rather on the experience of the developers themselves. And although “flow” might be subjective and perceptual, studies have linked it to higher productivity and reduced stress; see this open-access book chapter for a readable overview of the research.

To get an idea of Copilot’s impact on flow, we asked the following questions:

| Question | Choices |
|---|---|
| Did Copilot decrease your need to switch out of your IDE (for example to search for answers or check the documentation)? | |
| Did Copilot enhance your capacity to stay in your development flow? | |

The results were unambiguous: most users found that Copilot helped significantly, and a strong majority found that it helped at least a little.

Learnings from organizing the experiment

Although the experiment went well overall, we noted a few challenges worth sharing.

First, ensuring active participation in the experiment required a collective effort within the company. Spreading the word internally about the experiment and looking for participants is an effort not to be underestimated. In our case, we benefited from great support from internal leaders and managers who helped communicate with and recruit participants. Even so, we would have liked to have more participants. It turns out that engineers are sometimes just too busy!

Second, keeping participants focused on the experiment was harder than expected. We had asked participants to make a git commit at the end of each task, thinking that we could use this data to quantify the time it took for each participant to complete their tasks. When looking at the data, we were surprised to see that the time between commits varied widely and was often much longer than expected. When asked, several participants reported that they had to interrupt our experiment to deal with higher-priority tasks. In the end, we discarded the timing data: they were too limited and too heavily influenced by external factors to provide useful conclusions. For the same reason, we haven’t even mentioned yet that our study had a control group: since the timing data wasn’t useful, we’ve omitted the control group entirely from the data presented here.
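For readers who want to try the commit-based timing approach themselves, here is a minimal sketch of the computation. It assumes one ISO 8601 timestamp per commit, oldest first, which could be obtained for example with `git log --reverse --format=%cI`. The function names, the two-hour threshold, and the timestamps are hypothetical and only serve the illustration.

```python
from datetime import datetime, timedelta


def task_durations(commit_times):
    """Return the elapsed time between consecutive commits.

    `commit_times` is a list of ISO 8601 timestamps, oldest first: a
    starting commit followed by one commit per completed task.
    """
    parsed = [datetime.fromisoformat(t) for t in commit_times]
    return [later - earlier for earlier, later in zip(parsed, parsed[1:])]


def flag_suspicious(durations, limit=timedelta(hours=2)):
    """Mark durations above `limit`, which likely include interruptions.

    The two-hour default is an arbitrary value chosen for this example.
    """
    return [d > limit for d in durations]


# Made-up timestamps: the second task spans an overnight interruption.
commits = [
    "2024-05-06T09:00:00+02:00",
    "2024-05-06T09:40:00+02:00",
    "2024-05-07T10:15:00+02:00",
]
durations = task_durations(commits)
print(durations)
print(flag_suspicious(durations))
```

A check like this only detects implausibly long gaps. It cannot tell how much of a gap was actually spent on the task, which is the core reason our timing data could not be salvaged.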

The ideal scenario of securing dedicated, uninterrupted time from a large pool of engineers proved impractical within our organizational context. Despite these limitations, we gathered a meaningful dataset that contributes valuable perspectives to the existing body of research on AI-assisted development.

Further references

Speaking of other work out there, there’s a lot of it! It turns out that many people are excited by the potential of code assistants and want to understand them better. Who knew? Here is some further reading that we found particularly interesting:

- Experiments at Microsoft and Accenture introduced Copilot into engineers’ day-to-day workflow and measured the impact on various productivity metrics, like the number of opened pull requests; they found that Copilot usage significantly increased the number of successful builds. They had a much larger sample size than we did (Microsoft and Accenture have a lot of engineers), but unlike us they didn’t specifically consider the uptake of unfamiliar tools and libraries.

- A research team from Microsoft and MIT recruited developers from Upwork, gave them a task, and measured the time it took with and without Copilot’s help; they found that Copilot users were about 50% faster. They did a better job than we did at measuring completion time (they used GitHub Classroom), but we think our exit survey asked more interesting questions.

- The Pragmatic Engineer ran a survey about how engineers are using AI tooling, covering popular tools and their perceived impact on development.

Conclusion

Our experiment provided valuable insights into the impact of GitHub Copilot on developer experiences at Modus Create. Overall, developers reported increased productivity and a more seamless workflow. Participants used Copilot extensively in specific coding scenarios, such as automated testing and modifying code that used libraries they were unfamiliar with, and they felt more productive in those cases.

It was particularly interesting to see how the interface to the AI assistant (chat vs. completion) affected participants’ opinions on what the assistant was useful for, with chat-heavy users prioritizing exploration over acceleration and completion-heavy users the other way around. As interfaces and tooling continue to evolve — faster than we can design and run experiments to test them — we expect them to play a huge role in the success of AI-powered code assistants.

[1] We made a small mistake with the wording of the Pandas and Streamlit questions: we gave participants the options “I have never used it”, “I have heard of it”, “I have used it before in a limited way”, “I am comfortable with it”, and “I am an advanced user”. The problem, of course, is that these responses aren’t mutually exclusive. Given the order the responses were presented in, we think it’s reasonable to interpret “I have never used it” responses to mean that they’d heard of it but never used it. For the plot, we’ve combined “I have never used it” and “I have heard of it” into “Never used it”.

Behind the scenes

Guillaume is a versatile engineer based in Paris, with fluency in machine learning, data engineering, web development and functional programming.

Nour is a data scientist/engineer who recently made the leap of faith from Academia to Industry. She has worked on Machine Learning, Data Science and Data Engineering problems in various domains. She has a PhD in Computer Science and currently lives in Paris, where she stubbornly tries to get her native Lebanese plants to live on her tiny Parisian balcony.

Joe is a programmer and a mathematician. He loves getting lost in new topics and is a fervent believer in clear documentation.
